Drawing a spectrum is not the most stupid thing a computer can do. Actually it requires a lot of patience and dedication, both for a programmer and a user. All the computer has to do, in the case of a 1D spectrum, is to connect adjacent points. They are also equally spaced, along the x axis. Where's the complication? Furthermore, little has changed in the last 20 years. The only change I notice is that there are less plotters around today, which means more room in the lab, less machines to maintain and less drawing routines into the program.

Unfortunately computers seems to follow the Murphy's law: they always find a way to complicate my life. I told you that I installed Jeol Delta, courtesy of Jeol, but it's too difficult for me to use. It draws spectra wonderfully, into a small pane, and I don't know how to enlarge it (I'd like to see the plot as large as my monitor). That's not a big issue, because Delta would still remain too complicated for me to use it. I also have Mnova, and I know how to use it. Mnova can draw a plot as large as the window, but it's painfully slow. I have tried to modify the settings, and actually the situation improves a lot with the option "Entire Page" and the pen width = 1. Even after this optimization, Mnova still remains slower than Delta. With the latter I can continuously change the intensity of the spectrum, and the response is fluid. Mnova reacts very slowly, instead, showing a flashing watch cursor, whenever I roll the scrolling wheel. Surprisingly, the manual phase correction appears more fluid (the computer is doing the same things plus additional processing, yet it takes less time! why?). In conclusion, I have discovered how to make the plot as large as the window, but it's impossibly slow, therefore I have switched to the "Entire Page" mode, which is uncomfortably slow.

For me the drawing speed is fundamental, because I navigate a lot through my spectra and want the computer to be as fast as my hands. There are, also, economical and psychological motivations: I expect any new combination computer+software to be as fast as its predecessor. I assume that the fault is mine or of my computer, but what can be good news for you is bad news for me (if the problem was more generalized, I had some hope to find some help some where). Don't worry about me, I also have a third program that combines the largest size with the fastest speed; I dubbed it "the ultimate NMR experience".

Today's graphic cards can be faster than the rest of the machine, but only when they like, and they have never learned how to draw a 1D plot. The single points of the spectrum are connected by the program, not by the graphic card. Not so bad: when the program takes care of the humblest task, it also has more control over them.

The user can do very little, apart from verifying the limits of the software in use. You should verify 3 things:

1) Does the program draw fast enough? If the answer is NO, I would reduce the size of the FT anytime I can.

2) Is the plot faithful to the spectrum? The answer is YES in the case of the above programs, but the situation is often worse. If you disable the antialiasing (or when it's already absent) you can see shoulders when they don't exist and singlets with an apparent trace of splitting on their tops.

Those small couplinga are not due to a neighbor nucleus, but are a courtesy of the graphic card (or of an ignorant programmer who can't take advantage of today's graphic cards).

3) Is the spectrum synchronized with the scale? This is something difficult to verify. You really need to master the software to verify such a detail. You can do it indirectly, for example when you create a view with two or more spectra superimposed. If all the spectra are perfectly aligned at all magnification levels, and if you read consistent frequency values from the scales and the cross-hair tools, then all is OK.

It's not enough that all the drawing routines are aligned, they must also be CENTER-aligned. What's more difficult to realize is that they must be INTRINSICALLY center-aligned. This apparently futile consideration is at the heart of a successful alignment. Let's take for example the same spectrum, acquired from 0 to 64 ppm and processed twice. Let's make the FT size = 32 points the first time and 64 points the second time. If you believe that a point can't have an intrinsic size, you'll make them fall at 0, 2, 4, ... 62 ppm in the first case and at 0, 1, 2, ... 63 ppm in the second case. The first point of the first spectrum, falling at 0, corresponds to the first pair of points of the second spectrum, centered at 0.5 ppm. As you can see, the spectra are not aligned, there's a difference of 0.5 ppm. If, instead, you conceptualize the point like a nucleus immersed in the MIDDLE of its electronic cloud, then the points of the first spectrum fall at 1, 3, 5, ... 63 and the points of the second spectrum at 0.5, 1.5, 2.5, ... 63.5. Now they are perfectly aligned, as you can easily verify.

You must know the above things from the start, even when your needs are limited to a single spectrum per plot. In conclusion, no point can ever fall on the boundary of the spectral width. If it does, the program is not able to always align the spectra. If the above discussion seems purely academic, try aligning a 2D plot with a 1D spectrum... if it's simple, praise the programmer!

After multiplication with a negative exponential (2 Hz) this spectrum becomes:

After multiplication with a negative exponential (2 Hz) this spectrum becomes:

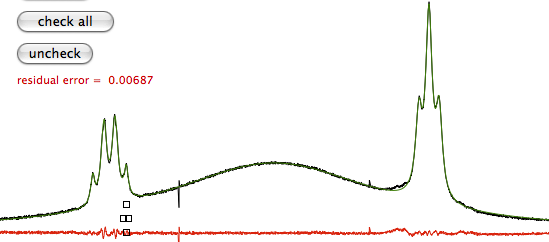

The tails of NMR peaks are much larger than you may think. The components of a multiplet are often fused into a single group. In this case, the apparent distance of two positive Lorentzian curves is less than the actual distance. I can attenuate the tails and obtain a complete separation of the above signals with a positive exponential (1.6 Hz) and a gaussian (1.2 Hz).

The tails of NMR peaks are much larger than you may think. The components of a multiplet are often fused into a single group. In this case, the apparent distance of two positive Lorentzian curves is less than the actual distance. I can attenuate the tails and obtain a complete separation of the above signals with a positive exponential (1.6 Hz) and a gaussian (1.2 Hz).

When a spectrum is in magnitude or power representation, you have a mix of absorption and dispersion curves. The latter contribute with large and nasty tails. I have attenuated them with a sine bell shifted by 6º.

When a spectrum is in magnitude or power representation, you have a mix of absorption and dispersion curves. The latter contribute with large and nasty tails. I have attenuated them with a sine bell shifted by 6º.

This synthetic FID does not decay. I can attenuate the wiggles (without generating tails) with a cosine squared function.

This synthetic FID does not decay. I can attenuate the wiggles (without generating tails) with a cosine squared function.