What's your position and where are you working?

For the past 5 years I have worked as a lecturer in Biological Chemistry in the section of Biomolecular Medicine at Imperial College London.

Where have you been working before?

Before this, I post-doc’ed at Cambridge; before that, UC Davis; and before that, for my first post-doc, at Imperial College again.

Briefly describe your research.

I am interested in metabolism in invertebrate and microbial species, and how this is involved in several different biological questions. Some of the projects I currently work on include microbial virulence and pathogenesis; how metabolism is affected by problems with recombinant protein folding in the bioprocessing yeast Pichia pastoris; and using earthworms as biomonitors of environmental pollution.

What do you use NMR for?

Together with mass spectrometry, I use NMR for metabolite profiling, as part of the technology for metabolomic studies. Although it’s generally less sensitive than mass spec, NMR still has a very useful role – as a near-universal and robust detector, it can give a quick and information-rich spectral profile. Most of the work we do, we just use 1D NMR for profiling; particularly useful for studies where you want to process as many samples as possible. However there are also other cases where more in-depth NMR experiments are needed, for example for isotopomer analysis to investigate metabolic fluxes; or to assign novel metabolites (essentially a natural products chemistry problem).

Which NMR software are you using?

XWIN-NMR and Topspin for data acquisition; iNMR for all NMR processing. I also use Chenomx NMR Suite for helping assign and quantitate metabolites in NMR spectra.

Which other NMR software have you used in the past?

I’ve also used VNMR and ACDLabs NMR software, and MestreC (before it was released as a commercial product).

How do you rate iNMR?

iNMR is not only my favourite NMR software for Mac OS (I’m not sure how many competitors there are at the moment), but it’s easily my favourite NMR software full stop. It’s one of a handful of Mac-only packages that I use all the time as part of my regular working day (others include Papers, Aabel, and Bookends). Features that I particularly like include the Overlay Manager (which makes it by far the quickest and easiest NMR software to use for comparing multiple spectra, in my opinion), and also the overall simplicity of using it to produce quality spectral images that can go straight into a paper or presentation without having to use multiple machines or virtualization. I admit I wasn’t really bothered by lack of anti-aliasing on spectra before using iNMR, but now I’m used to it, I do find it genuinely annoys me when looking at spectra in Topspin, say – it’s distinctly harder to see fine detail without zooming in. It’s also elegant and quick – not essential properties for software, but makes it more enjoyable to use on a regular basis.

Is it enough for your needs?

Well, it’s certainly enough for my needs in the sense that all of the spectra that I acquire are processed with iNMR – so in one sense, yes, almost by definition. It’s not 100% perfect though, there are still some small issues that could be ironed out in future releases – and as I’ve already said, I do use Chenomx software for some complementary uses which iNMR isn’t primarily designed for. I definitely see it as a crucial part of my workflow for the foreseeable future though, and expect it will keep improving (although by now it’s a relatively mature product).

Wednesday, 2 December 2009

Hands on 3-D Processing

A measure of the computing power available today on a desktop computer is the possibility of processing huge spectra in real time. For example: is it possible to correct the phase of a 3-D matrix interactively, in real time? The answer is yes, and you don't even have to employ more than a single core or buy a high-end graphics card. By interactive processing I mean that:

- you see a graphic representation of the matrix at each processing stage.

- you can play with it, for example change a parameter just to see the effect it has on the matrix.

It is always necessary to know and understand the mathematical rules that govern NMR processing. The more you know them, the more you enjoy their visual representations. The more you play with the graphics, the more you understand the maths behind them. The two things go together.

You can find a self-teaching course on basic 3-D processing on the iNMR web site. There is no theory, only a lot of examples and a good measure of practical tips.

Thursday, 26 November 2009

Open Source NMR freeware

Most of the readers arrive here using Google, without knowing me and my blog. Usually they get very angry because they arrive... on the trapping post I wrote 3 years ago! I want to do something to keep them glad...

So you want "open source" stuff? Do you know what it really means? Are you ready to compile, test, debug it and add a graphic interface to it?

Just because you asked for it, here is a list of available projects. If you know other links, add them in a comment.

CCPN

NPK

matNMR

ProSpectND

Connjur

Newton-NMR

nmrproc

DOSY Toolbox

list of 30+ projects

Monday, 16 November 2009

Tips

It is rare to find valuable tips about processing on the web. When it happens, bookmarking the page is probably not enough (it may disappear); copying it is a better idea. Here is the link:

http://spin.niddk.nih.gov/NMRPipe/embo/

When you arrive there, scroll down until you find the chapter Some General Tips About Spectral Processing.

The focus is on multi-dimensional processing with NMRPipe, but a few concepts are generally applicable indeed.

The brief discussion about first-point pre-multiplication is something to bear in mind. You can also find clearly expressed opinions on zero-filling, linear prediction and baseline correction.

Saturday, 14 November 2009

Shadow

Here is a picture I have found on the internet. I have never been in this place, if this is what you want to hear. Suppose, instead, that I live just in front of this tower and tomorrow I take a photo of it immediately after dawn, then another photo after 25 hours and so on for a week, with regular intervals of 25 h between pictures. Eventually I print all the pictures in order and ask you:

"These pictures have been taken in this exact order at regular intervals. Can you tell me how long the intervals were?".

Somebody will answer: "1 hour" and the answer would be partially correct. A more correct answer would be 1 + 24 n hours, with n = 0, 1, 2, 3….

The world of FT-NMR is similar. The difference is that the regular interval between consecutive observations is known in advance, while the speed of the hands and of the shadow is unknown. In other words, it is the opposite of my example of the tower.

The equivalent of a day, in the world of FT-NMR, is very very short and is called dwell time. It forms a Fourier pair, so to speak, with the spectral width. The spectroscopist sets the former, the latter is a mere mathematical consequence.

The ignorant says that the spectral width is the distance from the first point of the spectrum to the last one. This statement is as incorrect as saying that a day is made of 23 hours! The spectral width, actually, is the distance from the first point to the first point after the last one!

Let's verify it with a numerical example. Let's say we have a spectrum of 1024 points, separated by 2 Hz. If we zero-fill the FID up to 2048 points, the distance decreases to 1 Hz. The spectral width is 2048 Hz in both cases, and this is OK. If you measure it the other way, then you have a spectral width of 2046 Hz that grows to 2047 Hz. This is absurd, because the value is fixed at acquisition time and can't be changed by processing.

The relation is Spectral_width = 1 / Dwell_time.

Many books report another formula: Spectral_width = 1 / (2 * Dwell_time). This assumes an instrument without quadrature detection, in other words with a single detector. I have never used such an instrument.

To be exact, my whole description is dated, because today's instruments work in oversampling, the actual dwell times are shorter than what the spectroscopist sets (4 or 8 times the value reported), the FID that we see is already the result of a couple of FT (first direct, then inverse), et cetera. Seems complicated but it is not. We see what we need to see, the complications are hidden.

The important concept to remember is that the components of a digital spectrum, even if they are called "points", should be treated and conceptualized as tiles. There is no room in between. This idea will help you when you try to save a spectrum as a table of intensity vs. frequency. You will get the correct frequency value of each "point".
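The numerical example above can be checked with a few lines of Python. This is only a minimal sketch: the function name `frequency_axis`, the `first_freq` argument and the ascending order of the axis are assumptions made for illustration, and quadrature detection is assumed so that the spectral width equals 1 / dwell time.

```python
def frequency_axis(n_points, dwell_time, first_freq=0.0):
    """Return (spectral_width, spacing, frequencies), treating points as tiles."""
    spectral_width = 1.0 / dwell_time        # fixed at acquisition time
    spacing = spectral_width / n_points      # width of one tile: SW / N, not SW / (N - 1)
    freqs = [first_freq + k * spacing for k in range(n_points)]
    return spectral_width, spacing, freqs


dwell = 1.0 / 2048.0                         # seconds, so that SW = 2048 Hz
for n in (1024, 2048):                       # before and after zero-filling
    sw, step, freqs = frequency_axis(n, dwell)
    # columns: points, spectral width, spacing, first-to-last distance
    print(n, sw, step, freqs[-1] - freqs[0])
# prints:
# 1024 2048.0 2.0 2046.0
# 2048 2048.0 1.0 2047.0
```

The spectral width stays at 2048 Hz, while the first-to-last distance changes with zero-filling. Writing the table of intensity vs. frequency with a spacing of SW / N gives each "point" its correct frequency.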

Wednesday, 21 October 2009

Trigonometry for Dummies

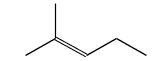

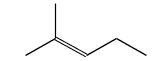

This is a doublet of doublets. If you move one doublet towards the other, for example by increasing the smaller J, the central peaks will coalesce into a single peak of double intensity (the algebraic sum of the central peaks).

A triplet is a special case of doublet of doublets (the Js are identical).

Another doublet of doublets. This time the smaller coupling is anti-phase.

If we increase the small J, we'll see another algebraic addition.

This is a triplet. Right?

Tuesday, 20 October 2009

NMR for Dummies

Here is where the real fun begins. I was not joking with my previous post. You can really do these things at home. The simple instructions are available on another site, and they are illustrated. After completing the tutorial (it takes 5 minutes in total) a working application will remain in your hard disc, and you will be free to apply the same treatment to your own spectra.

iNMR has always been available as a free download (since 2005). Access is unlimited and the program never expires. Other specialized simulation modules are included to cover most of the needs of an advanced spectroscopist (the few exceptions are motivated by the fact that other needs were already covered by existing freeware).

My blog is three years old. Half of the comments have been dedicated to a single post, which is clearly (and purposely) the least representative of the blog. When people find something useful (and this is certainly the case today) and free, they don't comment.

If you are not going to comment, I will comment by myself.

COMMENT: although it's a serious work, although it contains a lot of maths (and maybe just for this reason) it's funny too.

Friday, 16 October 2009

Try this at home

Returning to the DQF-COSY of taxol, I have found this challenge:

I couldn't tell which kind of multiplet it was. I have extracted the section corresponding to the red line and moved it into my novel simulator. Then I have introduced 3 couplings and pushed the "Fit" button.

That's all. The goodness of the fit is convincing. It is a doublet of doublets of doublets and the Js are: 14.7, 6.4 and 9.9 Hz. The latter corresponds to an antiphase coupling. Not only do I know which kind of multiplet it is, I have also measured the Js!

You might feel it's funny, I find it amazing, perhaps somebody will say it is useful. "The coupling constants were extracted from the DQF-COSY by the blogger's simulator".

Digitization

I am continuing my explorations on board my new multiplet simulator. It has been easy to simulate an asymmetric doublet. Here the asymmetry is only apparent and is due to the limited digitization.

As explained yesterday, the black line belongs to an experimental 2-D spectrum (today I have chosen a TOCSY), the red line is a theoretical doublet acquired and processed under the same conditions.

My simulator allows me to change the frequency by dragging the blue label at the bottom.

In this way the coupling remains constant. It turns out that my spectrum was a middle-range case. With a slight increase of the chemical shift I can obtain a symmetric doublet, or even a singlet!

Thursday, 15 October 2009

Natural Products

A reader asked me: "What is the shape of a 2-D peak?" and my answer was: "Exponential! (in time domain)". This is all you need to know, I think. The picture should help, as always. If you are reading this blog, it means that you have something like a computer. Even the iPhone is a computer (you can run programs on it) and can display my blog. I suspect, however, that you have a better computer, like I do.

You can use your computer to run simulations and more: you can ask it to fit a model spectrum to an experimental spectrum. This is what I have done. The black line is a (fragment of a projection of a) DQF-COSY. The red line is a model generated by the computer. All I said was:

1) the signal has an exponential decay;

2) it's an anti-phase doublet;

3) here is an experimental spectrum and here are its processing parameters;

4) please fit the model to my spectrum!

The goal was a better estimate of the coupling constant. The distance between the two peaks is 7.3 Hz, while the model contains a J = 8.88 Hz. My impression is that the model is more accurate by 1.6 Hz.

The calculation involved is very little (the whole process was faster than the blinking of an eye). The job of programming was more time-consuming. If you don't like spending time, find someone else that can do the job for you. They ask 0.99$ (0.79€) for an application (for the iPhone). Calculating the Js with more accuracy is certainly worth the price.

There is no secret, no new theory, should you need more explanations please ask.

If you want to know more, I can try to explain what was behind the question of the reader. It's not necessary, it's an unnecessary complication, but it's also well-known theory. If you submit to FT a function (e.g.: an exponential decay) you get a different function (in the example, a Lorentzian). They form a "Fourier pair". Since most 2-D spectra are weighted with a squared cosine-bell, my friend probably wanted to know which other function it makes a pair with.

Now you can realize that it is more a theoretical question than a practical one: if the final goal is to simulate an NMR spectrum, it's enough to know that the signal decays exponentially (and everybody knows it).
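For reference, the Fourier pair mentioned above can be written out explicitly. The symbol Ω (the offset of the signal, in rad/s) is introduced here only for illustration; R is the inverse of the transversal relaxation time.

```latex
s(t) = e^{i\Omega t}\, e^{-Rt} \quad (t \ge 0)
\qquad\Longleftrightarrow\qquad
S(\omega) = \int_0^{\infty} s(t)\, e^{-i\omega t}\, dt
          = \frac{1}{R + i(\omega - \Omega)}
          = \frac{R}{R^2 + (\omega - \Omega)^2}
            \;-\; i\,\frac{\omega - \Omega}{R^2 + (\omega - \Omega)^2}
```

The real part is the absorption Lorentzian, whose full width at half height is 2R in rad/s, that is R/π in Hz. When the decay is multiplied by a weighting function, the line shape is no longer a pure Lorentzian, which is why simulating in time domain and processing the model like the experiment avoids the question altogether.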

The program shown by the picture can simulate a multiplet (with 3 different J values). The multiplicities that can be chosen from the menus are:

but it's trivial to extend the menu and handle quintets and so on. Even without further modifications, you can already simulate a quartet of triplets of doublets. "R" is the inverse of the transversal relaxation time. This kind of program can't simulate second-order spectra, but nothing prevents you from writing a program that simulates second-order spectra in time domain.

What happens when the user pushes the "Fit" button? The computer runs a Levenberg-Marquardt optimization, based on first derivatives. How have I found the derivatives without knowing the function that describes a peak? You know: life is simpler when you have a computer...
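The idea can be sketched in Python under a few loudly stated assumptions. This is not the program shown in the picture: the function `antiphase_doublet`, its default acquisition parameters and the starting values are hypothetical, and the "experimental" trace is replaced by a synthetic one with a known J. The fit relies on `scipy.optimize.least_squares` with `method="lm"`, which wraps the MINPACK Levenberg-Marquardt routine and estimates the first derivatives by finite differences when no Jacobian is supplied, so no analytical peak shape is needed.

```python
import numpy as np
from scipy.optimize import least_squares


def antiphase_doublet(params, n=256, dwell=0.004, zero_fill=1024):
    """Real spectrum of an anti-phase doublet, simulated in time domain."""
    amplitude, j_hz, rate = params
    t = np.arange(n) * dwell
    # Two lines of opposite sign at +J/2 and -J/2 Hz around the carrier.
    fid = amplitude * (np.exp(1j * np.pi * j_hz * t) - np.exp(-1j * np.pi * j_hz * t))
    fid = fid * np.exp(-rate * t)                            # exponential decay, R = 1/T2
    fid = fid * np.cos(0.5 * np.pi * t / (n * dwell)) ** 2   # squared cosine bell
    # Same processing as the "experimental" trace: zero-fill, FT, real part.
    return np.fft.fftshift(np.fft.fft(fid, zero_fill)).real


# Stand-in for the experimental trace: the same model with known parameters.
true_params = np.array([1.0, 8.88, 20.0])    # amplitude, J (Hz), R (1/s)
experimental = antiphase_doublet(true_params)


def residuals(params):
    """Difference between the model and the experimental spectrum."""
    return antiphase_doublet(params) - experimental


# Start from the apparent splitting; derivatives are computed numerically.
fit = least_squares(residuals, x0=[0.8, 7.3, 25.0], method="lm")
print("fitted J = %.2f Hz" % fit.x[1])
```

With real data the residuals would be computed against the extracted trace, and the model would have to be generated with the acquisition and processing parameters of that spectrum.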

Monday, 28 September 2009

interviews

Here is the complete list of my interviews:

Kevin Theisen

Stefano Antoniutti

Arthur Roberts

Antonio Randazzo

Bernhard Jaun

Daniel J. Weix

Jacek Stawinski

Stefano Ciurli

Jake Bundy

If you would like to be interviewed, please send both questions and answers via email (or as a comment here).

The next Euromar conference will be held in Florence from July 4 to July 9 2010. I have no intention to attend but I want to be there. If you plan to attend, contact me via email or phone and let's arrange a meeting. I would be delighted to meet you in person.

Tuesday, 18 August 2009

Stefano Ciurli

Q. Please introduce yourself to the readers of the blog.

A. I am a full professor of general and inorganic chemistry at the University of Bologna (Italy).

I received my M.Sc. from the University of Pisa with a thesis done at Columbia University (NY), received my Ph.D. in chemistry from Harvard University, and did post-doctoral work at the NMR center in Florence.

I work on the structural biology and biological chemistry of metalloproteins. After several years of work on electron transfer proteins containing Fe and Cu, I have been spending the last ten years working on the biochemistry of nickel.

I use NMR mainly for processing and visualization of 1D, 2D, and 3D NMR spectra of proteins, before going on to use other programs for more dedicated tasks of spectral signal assignment.

Q. Which NMR software are you using?

A. The first choice is iNMR, for its amazing speed and flexibility, especially for 3D spectra. Easy to use and great performance. I use MestreNova for teaching purposes (mainly because of lack of Mac computers among the students, otherwise that would be perfect, considering the simulation modules etc.), and NMRPipe for other different tasks more dedicated to protein NMR.

Q. Is iNMR enough for your needs?

A. I like to use both iNMR and NMRPipe, having iNMR as the first choice and leaving NMRPipe for more specialized work.

Friday, 24 July 2009

QR-DOSY

At the beginning of the month we saw that there are cases where the diffusion coefficients can be measured with the same mathematical tools used to measure relaxation. We have seen DOSY spectra of pure compounds where each signal decays as a pure exponential. Even a mixture can behave in the same way, if the signals don't overlap. In summary, calculating the diffusion coefficients is often easy.

What I was curious to discover was: is it possible to recalculate the components of a mixture if the diffusion coefficients are known? From a purely mathematical point of view the answer was already: "yes", but I wanted to verify it in practice.

As far as I know, something like "QR-DOSY" has never been mentioned. So many DOSY methods already exist and I don't mind adding to the Babel with yet another acronym. Do you?

THEORY

If we know the diffusion coefficient D(j) we can calculate that the NMR signal in the spectrum i will be proportional to a value A(i,j) = exp(-D(j)F(i)), where F is a function of the gyromagnetic ratio, the gradient strength, the diffusion delay, etc., but not a function of the chemical shift nor of the diffusion coefficient.

For each column of the DOSY spectrum we have a system of equations: Ax = b.

x = intensity of the spectrum of the pure compounds at the chemical shift that corresponds to the given column.

b = intensity of the DOSY along the same column.

We know A and b, therefore we can calculate x. A is the same for all the columns and this is a great advantage. We can apply a well known decomposition, A = QR, which turns the system into Rx = Qᵀb.

Calculating Q and R from A takes time, but we need to do it only once. Then the computer can swiftly solve all the systems in the form Rx = Qᵀb.
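The decomposition step can be sketched with NumPy. This is a minimal illustration, not the code used for the pictures in this post: the function name `qr_dosy` and its arguments are hypothetical, F(i) is assumed to be already computed by the caller, and the usual convention A = QR is used, so that the system to solve is R x = Qᵀ b.

```python
import numpy as np


def qr_dosy(dosy, diff_coeffs, f_values):
    """Separate the pure-component spectra from a DOSY matrix.

    dosy        : array (n_gradients, n_points), one column per chemical shift
    diff_coeffs : array (n_components,), the known D(j)
    f_values    : array (n_gradients,), the known F(i)
    returns     : array (n_components, n_points), intensities x per column
    """
    a = np.exp(-np.outer(f_values, diff_coeffs))   # A(i,j) = exp(-D(j) F(i))
    q, r = np.linalg.qr(a)                         # reduced QR, computed only once
    return np.linalg.solve(r, q.T @ dosy)          # R x = Q^T b, all columns at once


# Synthetic check: two components, three chemical shifts, eight gradient steps.
f_values = np.linspace(0.0, 2.0, 8)
diff_coeffs = np.array([1.0, 3.0])
pure = np.array([[1.0, 0.0, 2.0],
                 [0.0, 5.0, 1.0]])
dosy = np.exp(-np.outer(f_values, diff_coeffs)) @ pure
recovered = qr_dosy(dosy, diff_coeffs, f_values)
print(np.allclose(recovered, pure))
```

Because A does not depend on the chemical shift, the right-hand sides of all the columns can be stacked and solved in a single call, which is where the speed comes from.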

METHODS

Obviously, we will find that some values of x will be negative. In such a case, we can choose a subset of A (omitting the component with negative intensity) and solve the reduced problem. This simplifying mechanism can be applied iteratively, even when the value of x is positive yet small.
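One possible reading of this mechanism, for a single column, is sketched below. The function name `solve_column_nonneg` and the `threshold` parameter are hypothetical, and the greedy rule (drop the single worst component, then solve again) is an assumption; a dedicated non-negative least-squares routine such as `scipy.optimize.nnls` would be an alternative.

```python
import numpy as np


def solve_column_nonneg(a, b, threshold=0.0):
    """Solve a x = b in the least-squares sense, dropping weak components.

    At each pass the component with the lowest intensity is removed if it
    falls below `threshold`; the reduced problem is then solved again.
    """
    active = list(range(a.shape[1]))         # indices of the components still in use
    x = np.zeros(a.shape[1])
    while active:
        sol, *_ = np.linalg.lstsq(a[:, active], b, rcond=None)
        worst = int(np.argmin(sol))
        if sol[worst] >= threshold:          # every remaining intensity is acceptable
            x[active] = sol
            break
        del active[worst]                    # omit that component and retry
    return x


# Synthetic column where the unconstrained solution is [2.0, -0.01].
f_values = np.linspace(0.0, 2.0, 8)
a = np.exp(-np.outer(f_values, [1.0, 3.0]))
b = 2.0 * a[:, 0] - 0.01 * a[:, 1]
x = solve_column_nonneg(a, b)
print(x)
```

Setting `threshold` to a small positive number extends the same rule to intensities that are positive yet small, as described above.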

RESULTS

This is the same old spectrum we are familiar with, processed with the new QR-DOSY.

DISCUSSION

Two components are completely separated. The third component is not, although at this point it becomes easy to recognize its peaks. Other cases I have studied yield similar results, maybe not as nice. Advantages of the QR-DOSY method:

- easy to understand;

- easy to use WITH THE ASSISTANCE of a software for the book-keeping activity (like measuring the diffusion coefficients);

- fast;

- the user can play with a few parameters, trying to improve the results;

- the final spectra are clean from artifacts.

Cons:

- not all the components are always resolved;

- it's a problem if two diffusion coefficients are similar (of course the program itself can easily detect this circumstance).

Tuesday, 14 July 2009

Jacek Stawinski

Q. What's your position and where are you working?

A. I am Professor of Organic Chemistry at Stockholm University, Stockholm, Sweden, and at the Institute of Bioorganic Chemistry, Polish Academy of Science, Poznan, Poland.

Q. Where have you been working before?

A. Adam Mickiewicz University, Poznan, Poland, and Institute of Organic Chemistry, Polish Academy of Science, Warsaw, Poland.

Q. Briefly describe your research.

A. My field of expertise is bioorganic phosphorus chemistry, nucleic acids chemistry, and lipid and phospholipid chemistry (http://www.organ.su.se/js).

Q. What do you use NMR for?

A. Characterization of synthetic intermediates, structure determination, spin simulations, NMR dynamic processes.

Q. Which NMR software are you using now?

A. iNMR, the latest version.

Q. Which other NMR software have you used in the past?

A. Swan NMR, Topspin, MestreC, MNOVA

Q. How do you rate iNMR?

A. iNMR is superior, by far, to all NMR software I have used. It provides a powerful, intuitive and professional environment for processing and plotting NMR data. The software is very fast, has scripting ability, and a lot of keyboard shortcuts and useful extras. No doubt, iNMR is right on the cutting edge of NMR processing software development. Thanks to its simple interface, iNMR is a user-friendly application, but it hides a lot of powerful tools for advanced tasks. And last, but not least, the support from the programmer is prompt, competent, and friendly.

Q. Is it enough for your needs?

A. I have never faced a situation where iNMR could not do what other software can. For me, it is the tool of choice for dynamic NMR. It is also very useful when teaching NMR courses.

Thursday, 9 July 2009

Bull's-Eyes

Here are some caffeine peaks (below) and EthoxyEthanol peaks (above). The display is DOSY-like, yet the numerical treatment is a simpler and faster mono-exponential fit. Each column has been processed independently from the rest, and each column yields a different result:

Now let's apply a Whittaker Smoother, with a small value of lambda (100), along each ROW:

or a big lambda (500):

or a huge value (8000):

The smoother averages the results obtained from the different columns. The peaks are perfectly aligned.
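For readers who want to try the smoother on a row of their own, here is a minimal sketch of the standard penalized least-squares formulation: the smoothed row z minimizes the sum of (y - z)² plus lambda times the sum of the squared differences of z, which leads to the linear system (I + lambda DᵀD) z = y. The function name `whittaker_smooth`, the second-order differences and the dense matrices are assumptions made for illustration, not necessarily what was used for the pictures above.

```python
import numpy as np


def whittaker_smooth(y, lam, order=2):
    """Whittaker smoother: solve (I + lam * D^T D) z = y for z."""
    y = np.asarray(y, dtype=float)
    n = y.size
    d = np.diff(np.eye(n), n=order, axis=0)   # difference matrix, shape (n - order, n)
    return np.linalg.solve(np.eye(n) + lam * (d.T @ d), y)


# The larger lambda is, the stronger the averaging between neighbours.
row = np.array([0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 1.0])
for lam in (100.0, 500.0, 8000.0):
    z = whittaker_smooth(row, lam)
    print(lam, z.max() - z.min())
```

Dense matrices keep the example short; for rows with thousands of points a sparse or banded solver would be the sensible choice.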

Wandering

Jean Marc Nuzillard writes:

The example that is provided by Carlos in his blog would benefit from a processing trick I use when there is no multiplet superimposition:

I simply integrate the multiplets that are recorded at different gradient intensities and I perform a monoexponential fit on integral values.

I suspect the noise on integral values is lower than the one in individual columns of the 1D spectra set, thus making D values more accurate.

Bye bye butterflies. This idea is absolutely trivial but maybe it would be interesting to implement it.

I agree with the idea. Actually I had arrived at the same conclusion for a different reason. Look at this spectrum (a DOSY after FT):

The signal/noise ratio is low yet acceptable, the compound is pure, the decay mono-exponential. No concern about phase and baseline. Alas, this peak is too nasty for my tastes. The frequency is not constant over time. The "trivial" trick by Jean-Marc should work. I give my preference to binning, because the output of binning is not a numerical table (like with integration) but a new spectrum, so the same NMR program can be used for the subsequent exponential fit.
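As a rough illustration of why binning tolerates a wandering peak, the sketch below sums adjacent points of each row into buckets, so the output is again a (shorter) spectrum per gradient step. The function names, the bin width and the toy numbers are hypothetical and are not taken from any NMR program.

```python
def bin_spectrum(points, width):
    """Sum consecutive groups of `width` points.

    A trailing group shorter than `width` is dropped.
    """
    if width < 1:
        raise ValueError("width must be at least 1")
    usable = len(points) - len(points) % width
    return [sum(points[i:i + width]) for i in range(0, usable, width)]


def bin_rows(rows, width):
    """Bin every row (one row per gradient step) with the same width."""
    return [bin_spectrum(row, width) for row in rows]


# Two gradient steps of a made-up peak whose position drifts by one point.
rows = [
    [0, 0, 8, 0, 0, 0],
    [0, 4, 0, 0, 0, 0],
]
binned = bin_rows(rows, 3)  # the peak stays in the first bin of both rows
```

After binning, the decay can be read along a single column even though the raw peak moved. The bins must be wide enough to contain the whole drift; a peak that crosses a bin boundary would still be split between two columns.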

Before Carlos corrects me, let me stress a few details. We have processed the same experiment with two different algorithms. I got the butterflies and I am not proud of it. Carlos' pictures are a little smaller and cannot be compared:

(taken from his blog). Anyway, we are really confusing the matter here. From what I understand, the purpose of Carlos was to show how Bayesian DOSY can effectively separate the components of a mixture. I can't express any opinion, because as I said I only have two experiments to work with. In both cases there is no superposition of peaks, therefore there is nothing to separate. I normally like simple examples like these, yet I acknowledge they are not enough.

Today I could repeat Carlos' processing, because he himself has very kindly given me both the raw data and the software. But 1) I am not terribly interested in this comparison 2) He didn't give me these things for free so that I could criticize him 3) If I really want to do such a thing I'll post a comment directly on his blog.

Now let's go on: how to make the butterflies go away? The next post shows how to transform a butterfly into bull's-eyes.

Pictures

In my fourth year of blogging I have started publishing pictures of spectra. I like my pictures, yet this is not the point. I don't want to convince you that my pictures are beautiful. I want to convince you that I am a spectroscopist and not a programmer.

The programmers have always stated that "in the near future" it will be possible to obtain a completely automatic analysis: from the sample directly to the response (meaning a chemical formula or a list of values), by-passing the plotted spectrum. I have found the same concept and expression "near future", scattered in the literature of all the decades, from the 60s onward.

I really believe it: someday in the future we'll arrive at the completely automatic analysis.

Since, at the time of writing, I am working as a programmer and not as a spectroscopist, I should adhere to this belief and be happy. It happens, instead, that I always think like a spectroscopist scared of remaining unemployed. I really hate to design and write programs for automatic processing and reporting. I like creating programs to display the spectra.

Here comes the difference between "diffusion" and "DOSY". The very idea of DOSY is to make a trouble-free program: push this button and you'll have everything, the diffusion coefficients and the individual components of the mixture. This is what I have understood up to now. In my whole life I have only worked with 2 DOSY experiments, which have not been carried out by me. I have already shown the first one and today I am going to show the second one (please wait a few minutes...).

Saturday, 4 July 2009

Ghost of a Butterfly

Butterflies

Carlos kindly gave me a copy of the DOSY spectrum he showed on his blog last year.

I am experimenting with alternative processing routes. Here is a detail of the caffeine peaks, after applying the crudest (and probably simplest) treatment. The decays have been linearized (by taking the logarithm). The slope of the line is proportional to the diffusion coefficient. The final results are reported as a normal DOSY spectrum.

Click on the thumbnail to see the image at natural scale.

There is less signal/noise in the tails of the peaks, obviously, therefore the error increases: graphically we see the wings of a butterfly.

Since it's impossible to correct the phase perfectly, some butterflies are asymmetric.
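The linearized treatment can be sketched in a few lines. This is only an illustration under stated assumptions: the x values are taken to be the squared gradient strengths (the usual form in which the intensity decays exponentially with the squared gradient), and the function name and numbers are made up. The constant that converts the slope into an actual diffusion coefficient depends on the pulse sequence and is left out.

```python
import math


def loglinear_decay_fit(g2, intensities):
    """Least-squares line through the points (g2, ln I).

    Returns (slope, ln_I0). Points with non-positive intensity are skipped,
    because their logarithm is undefined.
    """
    xs, ys = [], []
    for x, value in zip(g2, intensities):
        if value > 0:
            xs.append(float(x))
            ys.append(math.log(value))
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two positive intensities")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Made-up decay of one column: I = 100 * exp(-0.5 * g2)
g2 = [0.0, 1.0, 2.0, 3.0, 4.0]
decay = [100.0 * math.exp(-0.5 * x) for x in g2]
slope, ln_i0 = loglinear_decay_fit(g2, decay)
```

Repeating the fit for every column gives one slope per column. In the tails of a peak the intensities are small and noisy, so their logarithms scatter widely, which is consistent with the butterfly wings described above.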

Friday, 12 June 2009

Daniel J. Weix

Q. What's your position and where are you working?

A. Assistant Professor, University of Rochester.

Q. Briefly describe your research.

A. Synthetic organic methodology, especially catalysis.

Q. What do you use NMR for?

A. Assaying purity of synthesized materials and identification of products (both organic and inorganic).

Q. Which NMR software are you using?

A. We use iNMR, latest version.

Q. Which other NMR software have you used in the past?

A. I have used MestreC, Bruker XWIN-PLOT, NUTS, MacNUTS (new version), and MestreNOVA.

Q. How do you rate iNMR?

A. I like iNMR better than all of the other software that I have used. The workflow is good and I'm already almost as fast as I was under NUTS (the fastest software of those mentioned above). It seems superior to MestreNOVA, with better keyboard shortcuts (MestreNOVA involved a lot of clicking) and a better user interface. New features are discoverable vs. having to dig into the manual. Importantly, my students like the software very much and they can be taught to use it in very short order.

Q. Is it enough for your needs?

A. More important to me than a list of features is the usability of the program. What good are features that sound nice, but are buggy or so round-about to use that they take more time than the result is worth? Can I get the results I need easily? Can we produce publication quality images? Is it fast? Two features that are often overlooked are the quality of the manual and the customer service of the company behind the software. In these areas iNMR excels. For us, iNMR is more than adequate!

Wednesday, 10 June 2009

Bernhard Jaun

Q. What's your position and where are you working?

A. Professor in Organic Chemistry at ETH Zurich (Swiss Federal Institute of Technology). Head of the NMR labs.

Q. Where have you been working before?

A. Columbia University New York, then ETH Zurich for the last 29 years.

Q. Briefly describe your research.

A. Physical organic chemistry, in particular its application to biological questions.

Q. What do you use NMR for?

A. I am the head of NMR operations in an institute with more than 200 scientists. Most of them use NMR. The applications go from 3D solution structures of biopolymers to physical organic applications of NMR (such as host guest complexation, dynamic processes, thermodynamics and kinetics) to structure elucidation of novel natural compounds and (for the majority) characterization of synthetic intermediates.

Q. Which NMR software are you using now?

A. Topspin, VNMR, Mnova, iNMR, plus specialized software for 3D solution structure calculation such as XPLOR, SPARKY, CNS, DYANA, MARDIGRAS etc.

We have quite a large percentage of people using Macs (ca. 50%) in our institute.

Q. Which other NMR software have you used in the past?

A. SwaNMR for Mac OS9 and most of the software used for NMR over the last thirty years.

Q. How do you rate iNMR?

A. iNMR is a good and fast program which can do practically all of the work an NMR spectroscopist will ever need. Its strength lies in the flexibility and its more mathematical/physical approach to NMR such as being able to do all kinds of transforms, simulating dynamic exchange problems, analyzing spin systems etc. Clearly, the program is written by an NMR specialist for NMR spectroscopists.

The "weaknesses" are in the fact that iNMR is not as easy to learn as some other programs by people who do not know much about NMR and are only interested in getting "nice" plots and listings for their synthetic papers etc. Compared to other programs, iNMR uses only a fraction of icons and palettes but insiders can work very efficiently because of all the keyboard shortcuts and the scripting ability. The current versions still have a few bugs or inconveniences in the field of graphics, e.g. when it comes to plot 1D spectra at the border of 2Ds etc., axis adjustments when changing the window size etc. [Editor's note: this interview refers to the old version 3; the current version 4, made with the collaboration of prof. Jaun himself, solved all the above mentioned problems].

Maybe the best point about iNMR is that, in my experience, there is no other software where the programmer is so fast in responding to either bug reports or demands for new features. So, if I still sometimes get angry about a bug (or something I want to do but can't find out how) in iNMR, it is usually my own fault because I didn't take the time to write to the author about it. If I had contacted the author, the problem would long be solved by now. Compare that to MS Office or the spectrometer manufacturers' NMR programs!

Q. Is it enough for your needs?

A. We NMR spectroscopists have to accept that for a majority of the scientists in today's chemistry/biology research, NMR is a black box that's nevertheless - and "unfortunately" - absolutely necessary. They like to use software that seems to generate listings and plots without requiring knowledge by the operator. We still try to teach our own students about the innards of NMR experiments. But the reality is that the black-box attitude and the trend for automation are increasing all the time.

I think that in my domain of responsibility with 200 scientists using NMR, it might actually be a good idea to start to write some scripts for iNMR that do all the standard processing for routine spectra. This might make iNMR more popular for all those who are not really interested in the inner workings and just need a nice plot to show to their supervisor and who now rather use MNOVA for Mac because they think it is easier to use.

Also, I think that iNMR could become the tool of choice for all special and more physical things that can be done by NMR. In particular, there is only a very limited number of still living programs that can iteratively fit dynamic spectra from complicated exchanging spin systems. Other things I could think of are extracting coupling constants from 2Ds by simulation of cross peaks, analysing relaxation data, measuring residual dipolar couplings from heteronuclear 2D spectra, diffusion etc. etc.

Tuesday, 9 June 2009

Antonio Randazzo

Q. What's your position and where are you working?

A. I am an Associate Professor at the Faculty of Pharmacy - University of Naples "Federico II"- Italy

Q. Where have you been working before?

A. I have worked also at The Scripps Research Institute (San Diego - California - USA) and at the Vanderbilt University (Nashville - Tennessee - USA)

Q. Briefly describe your research.

A. I have worked in the field of bioactive natural products. I was in charge of the isolation and structural elucidation of new secondary metabolites from marine organisms. The characterization of the new compounds has been accomplished mainly by NMR. Then I moved to the structural study of proteins by NMR. Currently I study unusual structures of DNA. In particular I study the structure of modified DNA quadruplex structures... always by means of NMR. I have also had the occasion to use NMR in the field of food science.

Q. Which NMR software are you using now?

A. Currently I am using iNMR on two different machines: an iMac and a brand new Mac Pro, both running Leopard OS and both equipped with two monitors. I find it really cool to display 2-3-4 spectra distributed between the two screens and to use the recently developed "global cross" feature to display a synchronized cursor simultaneously in all spectra. In this way the assignment of any molecule becomes very simple even in the case of complex and overlapped spectra.

Q. Which other NMR software have you used in the past?

A. I have used many NMR programs. However, I have used Xeasy and Felix (Accelrys, San Diego USA) extensively.

Q. How do you rate iNMR?

A. Top score!

Q. Is it enough for your needs?

A. I find it EXCELLENT software. I am impressed by the very high quality of the processing features and by how easy it is to use. It is fast and very user friendly. It completely satisfies all my needs and it is also affordable. Furthermore, it is great for annotating spectra in order to get nice pictures for scientific work or teaching. Moreover, the after-sale assistance is absolutely incomparable with that of other software. Each improvement I have asked for has been realized in hours!!!! The assistance is the best ever. I have not found anything like the iNMR assistance before in all my career. I definitely give iNMR my strongest recommendation.

Monday, 8 June 2009

Arthur Roberts

Q. Please introduce yourself to the readers of the NMR software blog.

A. I am a project scientist at the School of Pharmacy at the University of California San Diego (UCSD). I was hired to bring some novel NMR technology that I developed at the University of Washington to UCSD.

I worked as a postdoctoral fellow at the Department of Medicinal Chemistry at the University of Washington and at Washington State University. I started my career as an EPR spectroscopist, where we built instruments. I have been doing NMR since 2003.

Currently I study the process of drug metabolism, which happens to be the main road block for drug development. We hope that our research will lead to drugs of higher efficacy and fewer side effects. We are developing NMR technology that will allow us to rapidly determine drug bound structures and will speed drug development. We have developed a variety of NMR pulse programs for this purpose.

We do Paramagnetic protein NMR. We also do NMR simulations and write NMR pulse programs.

Q. Which NMR software are you using now?

A. Topspin 2.1 and iNMR 3.15.

Q. Which other NMR software have you used in the past?

A. VNMRJ, Spinworks, Sparky, NMRpipe, MestreC, xwinnmr, and MestreNova

Q. How do you rate iNMR?

A. In terms of NMR software, it is the best in terms of ease of use and power.

iNMR

It can process 1D, 2D, and 3D. Easy to use and powerful. It can read multiple formats and can convert files to ascii. The graphics are also very nice. No apparent bugs.

Topspin

It can process 1D, 2D, and 3D. Also powerful, but not very easy to use. I need my data converted to ascii for analysis with other programs and I could not find a way to do it with this software. It can only read Bruker formats.

Mestre-C

It can process 1D and 2D data. Powerful and easy to use, but a little buggy. For 2D, the conversion to ascii is not ideal.

NMRpipe

It can process 1D and 2D data. Not as powerful as the above programs and very clumsy to use. No easy way to convert data to ascii. It is also very slow.

MestreNova

It can process at least 1D and 2D. Powerful and easy to use, but slow, very slow. I found no easy way to convert my 2D spectra to ascii. Also, several useful features were removed from MestreC for this version.

VNMRJ

It can process 1D and 2D. Not as good as Topspin, but equally difficult. This software can not convert to ascii or read other file formats.

Spinworks

It can process 1D and 2D. It is fairly easy to use, but not as powerful as the software above. It is also quite buggy.

This is how I rate all the software and I tested a lot of NMR software:

iNMR > Topspin > Mestre-C > xwinnmr > MestreNova > Spinworks > Sparky > NMRpipe

Q. Is iNMR enough for your needs?

A. Yes, it does everything that I need including processing 3D data sets and it does it fast. It allows me to read files that I produced at the University of Washington on a Varian Unity Inova and the Bruker Avance III at the University of California San Diego. It allows me to convert files to ascii, so that I can do singular value decomposition of it with a scientific analysis program that we use. It produces publication-quality graphics. It is also very easy to use, so I don't need to spend a lot of time training graduate students or other postdocs on how to use it. I also didn't have to spend a lot of time learning it myself. I can't imagine a lab without it.

A. I am a project scientist at the School of Pharmacy at the University of California San Diego (UCSD). I was hired to bring some novel NMR technology that I developed at the University of Washington to UCSD.

I worked as a postdoctoral fellow at the Department of Medicinal Chemistry at the University of Washington and at Washington State University. I started my career as an EPR spectroscopist, where we built instruments. I have been doing NMR, since 2003.

Currently I study the process of drug metabolism, which happens to be the main road block for drug development. We hope that our research will lead to drugs of higher efficacy and fewer side effects. We are developing NMR technology that will allow us to rapidly determine drug bound structures and will speed drug development. We have developed a variety of NMR pulse programs for this purpose.

We do Paramagnetic protein NMR. We also do NMR simulations and write NMR pulse programs.

Q. Which NMR software are you using now?

A. Topspin 2.1 and iNMR 3.15.

Q. Which other NMR software have you used in the past?

A. VNMRJ, Spinworks, Sparky, NMRpipe, MestreC, xwinnmr, and MestreNova

Q. How do you rate iNMR?

A. In terms of NMR software, it is the best in terms of ease of use and power.

iNMR

It can process 1D, 2D, and 3D. Easy to use and powerful. It can read multiple formats and can convert files to ascii. The graphics are also very nice. No apparent bugs.

Topspin

It can process 1D, 2D, and 3D. Also powerful, but not very easy to use. I need my data converted to ascii for analysis with other programs and I could not find a way to do it with this software. It can only read Bruker formats.

Mestre-C

It can process 1D and 2D data. Powerful and easy to use, but a little buggy. For 2D, the conversion to ascii is not ideal.

NMRpipe

It can process 1D and 2D data. Not as powerful as the above programs and very clumsy to use. No easy way to convert data to ascii. It is also very slow.

MestreNova

It can process at least 1D and 2D. Powerful and easy to use, but slow, very slow. I found no easy way to convert my 2D spectra to ascii. Also, several useful features were removed from MestreC for this version.

VNMRJ

It can process 1D and 2D data. Not as good as Topspin, but equally difficult. This software cannot convert to ASCII or read other file formats.

Spinworks

It can process 1D and 2D. It is fairly easy to use, but not as powerful as the software above. It is also quite buggy.

This is how I rate the software, and I have tested a lot of NMR software:

iNMR > Topspin > Mestre-C > xwinnmr > MestreNova > Spinworks > Sparky > NMRpipe

Q. Is iNMR enough for your needs?

A. Yes, it does everything that I need, including processing 3D data sets, and it does it fast. It allows me to read files that I produced at the University of Washington on a Varian Unity Inova and on the Bruker Avance III at the University of California San Diego. It allows me to convert files to ASCII, so that I can do singular value decomposition of the data with a scientific analysis program that we use. It produces publication-quality graphics. It is also very easy to use, so I don't need to spend a lot of time training graduate students or other postdocs on how to use it. I also didn't have to spend a lot of time learning it myself. I can't imagine a lab without it.

Stefano Antoniutti

Q. Please introduce yourself to the readers of the NMR software blog.

A. I have been Associate Professor of General and Inorganic Chemistry at the Dept. of Chemistry, Università di Venezia Ca' Foscari, Italy, since 2000. I have been responsible for the Dept.'s NMR instrumentation and services since 1992. I joined the Department in 1983 as a researcher. Since then, my name has appeared on more than 80 scientific papers in ISI-classified journals in the field.

My research area is inorganic and organometallic transition metal complexes. The research group I belong to works mainly on the synthesis and characterization of new complexes.

NMR is our main method for characterizing new compounds. I began with permanent-magnet, pen-plotter 1H CW instruments in the early '80s; moved to one-dimensional FT NMR in the '80s; today, multidimensional, multinuclear NMR (1H, 31P, 13C, 15N, 119Sn, etc.) is my daily routine.

Since 1995 our Dept. has separated the acquisition of spectra from the processing of spectral data, exporting NMR spectra from our Bruker AC instrument to our personal computers, mainly Macintosh machines.

Luckily, after Bruker withdrew from the Mac software area, we discovered the SwaN-MR package (free!!!), which quickly became the workhorse of our daily NMR duties. Today, our Mac users have switched to iNMR, the Wintel users to MNova.

Q. Which other NMR software have you used?

A. Apart from the built-in software of our instruments (Varian and Bruker NMRs), we began in the '90s with WINNMR, a Bruker package written for both PCs and Macs, in two versions. At some point, Bruker decided to stop developing the Mac version and to concentrate its efforts on the PC side. At more or less the same time I discovered SwaN-MR and Giuseppe Balacco, which started a brand new era for our work. You should remember that Bruker software was very expensive (a license cost about €500 in those years, and required a hardware key!) and sadly far from complete, at least initially. For example, it supported only the 1H and 13C nuclei; only after some requests to the development team was it possible to obtain an improved, truly multinuclear version!

In a sense, SwaN-MR (which I still sometimes use today on a G5 machine) was a complete breakthrough, having a revolutionary impact on our work!

Giuseppe was very cooperative, so every time a bug surfaced, I obtained an improved and corrected version in a short time (from hours to minutes!). He introduced the simulation routine at my request and tailored it exactly as a chemist, in my opinion, needs it, not as a software engineer thinks a chemist should use it: a real dream. He maintained, and improved on, the same approach when he wrote iNMR.

I find the latter a very high-quality piece of software, almost unbeatable for its price/performance ratio. A more complete software package may well exist, but at ten or more times the price! (If I remember correctly, current Bruker PC software is in the thousands of euros for a license, and only for PC or Linux boxes.)

Q. Is iNMR enough for your needs?

A. As usual, you use only a small fraction of what a piece of software offers. Even after hosting Giuseppe a couple of times in recent years so that he could teach us how to use the program, I think that using more than 20% of the features it offers (which are continuously improved) would be unrealistic. Every day I discover something new and, with more or less the same frequency, new options arrive in new versions of the software, which Giuseppe treats as a real commitment.

Anyway, iNMR has never failed to offer me a solution to any need in my research work, and I always cite it in my papers, hoping to increase the number of its users. I evaluated other software, but none fulfilled my needs like iNMR (and, in the days of Mac OS 7/8/9, SwaN-MR). For instance, its baseline correction routine for two-dimensional spectra is outstanding, giving far better results than the WINNMR one and letting you extract correlations you would otherwise have missed.

Sunday, 31 May 2009

Testing the Razors

As I wrote initially, I like the razors because they are easy to learn and use.

I have simulated two molecules only, because they are the standard (minimal) test I perform on simulation software. I come from the old school, where simulation means generating a plot from chemical shift values (while the razors mainly estimate the chemical shifts from the structure).
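To make the "old school" sense of simulation concrete, here is a minimal sketch in Python. It is an illustration only, not how iNMR or the razors work: it assumes Lorentzian line shapes, ignores couplings, and uses hypothetical names (`lorentzian`, `simulate_spectrum`) and an arbitrary line width. The peak positions are the experimental 1H shifts of N,N-dimethylformamide quoted in this post; the 1:3:3 intensities are assumed from the proton counts.

```python
def lorentzian(x, center, width):
    """Lorentzian line of unit height at `center`; `width` is the full width at half height (ppm)."""
    half = width / 2.0
    return half * half / ((x - center) ** 2 + half * half)


def simulate_spectrum(peaks, start, stop, points, width=0.01):
    """Sum one Lorentzian per (shift_ppm, relative_intensity) pair on a regular ppm axis."""
    step = (stop - start) / (points - 1)
    axis = [start + i * step for i in range(points)]
    intensity = [
        sum(height * lorentzian(x, shift, width) for shift, height in peaks)
        for x in axis
    ]
    return axis, intensity


# Experimental 1H shifts of DMF (ppm); intensities are assumed proton counts:
# one formyl proton and three protons for each N-methyl group.
dmf_peaks = [(8.019, 1.0), (2.970, 3.0), (2.883, 3.0)]

# 10001 points from 0 to 10 ppm give a digital resolution of 0.001 ppm.
axis, intensity = simulate_spectrum(dmf_peaks, 0.0, 10.0, 10001)
```

The two lists `axis` and `intensity` can then be handed to any plotting tool; replacing the experimental shifts with predicted ones gives the spectrum a predictor would draw.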

The first test is N,N-dimethylformamide.

1-H (chemical shifts in ppm)

exp.     calc.    diff.
8.019    1.609    -6.41
2.970    3.001    0.031
2.883    3.001    0.118

13-C (chemical shifts in ppm)

exp.     calc.    diff.
162.6    198.7    36.1
36.4     34.568   -1.9
31.3     34.568   3.3

The second test is ortho-dichloro-benzene.

Simulated by iNMR:

Simulated by HNMRazor:

Interview with Kevin Theisen

Kevin already told his story on the official website of iChemLabs. I was curious to know more details... Here is the first official interview of my blog.

OS: How accurate are the predictions of the NMRazors?

KT: The NMRazors are fairly accurate for most molecules. They will handle any molecule encountered in an undergraduate organic chemistry course. The NMRazors will be less accurate for molecules where complex anisotropic and 3D effects are present. I used several published references when developing the algorithms in the NMRazors and they are cited on the NMRazor website.

OS: Programming is similar to chemical synthesis: there are starting materials and finished products. What were the starting materials for the NMRazors?

KT: When I first began programming chemistry applications, I started with a graph-based depth-first search traversal of a database of reactions in order to optimize synthetic routes. A credible synthetic database was too expensive for me to obtain as an undergraduate, so I moved on to other applications in chemistry. I quickly discovered that the graph data structure is really integral to computational chemistry, as most chemical entities, especially structures, are efficiently modeled with graphs. I was and still am a huge fan of spectroscopy, so I began to work on algorithms to traverse molecules and find functional groups for nuclear magnetic resonance simulations. It was originally a text-based application, and I remember showing some of my favorite professors connection table inputs with ppm table outputs. It was very unattractive, so I taught myself Swing and the NMRazor GUI was created.
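The idea of traversing a molecule as a graph to find functional groups can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the NMRazor algorithm: the connection table format, the function names and the amide test are all hypothetical, and hydrogens are left out.

```python
# Hypothetical connection table for N,N-dimethylformamide (heavy atoms only).
# atoms maps an index to an element; bonds are (atom_i, atom_j, bond_order).
atoms = {0: "C", 1: "O", 2: "N", 3: "C", 4: "C"}
bonds = [(0, 1, 2), (0, 2, 1), (2, 3, 1), (2, 4, 1)]


def build_adjacency(atoms, bonds):
    """Turn the bond list into an undirected adjacency list."""
    adjacency = {index: [] for index in atoms}
    for i, j, order in bonds:
        adjacency[i].append((j, order))
        adjacency[j].append((i, order))
    return adjacency


def depth_first_order(adjacency, start):
    """Return atom indices in the order an iterative depth-first search visits them."""
    visited, order, stack = set(), [], [start]
    while stack:
        atom = stack.pop()
        if atom in visited:
            continue
        visited.add(atom)
        order.append(atom)
        # Push neighbours in reverse so the first-listed one is explored first.
        for neighbour, _ in reversed(adjacency[atom]):
            if neighbour not in visited:
                stack.append(neighbour)
    return order


def find_amide_carbons(atoms, adjacency, start=0):
    """Collect carbons that carry a double-bonded O and a single-bonded N."""
    found = []
    for atom in depth_first_order(adjacency, start):
        if atoms[atom] != "C":
            continue
        neighbours = adjacency[atom]
        has_carbonyl_o = any(atoms[n] == "O" and order == 2 for n, order in neighbours)
        has_single_n = any(atoms[n] == "N" and order == 1 for n, order in neighbours)
        if has_carbonyl_o and has_single_n:
            found.append(atom)
    return found


adjacency = build_adjacency(atoms, bonds)
visit_order = depth_first_order(adjacency, 0)
amide_carbons = find_amide_carbons(atoms, adjacency)
```

A real predictor would presumably recognise many more groups and then look up shift increments for each; here the traversal simply flags atom 0 as the amide carbon.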

OS: Why do you prefer Java? Because of the language itself, the available frameworks, the platform independence or any other reason?

KT: Java is a wonderful programming language for several reasons. Mainly, it's object oriented and the graphical capabilities available with Java Swing are really unparalleled in other languages. The other reason was that I used a Mac, my friends usually used PCs and a few had Linux, so I needed a programming language that I could use on Mac and then deploy on other operating systems. Java was really the only choice for me at the time, given my minimal experience. The only downside to Java is that it is interpreted, so it may be slower if the program is carelessly written, and the JREs on different operating systems are not always consistent, so I still need to test on all three systems before I am sure a program actually works.

OS: How much work was required? What was the most difficult part: the algorithm or the interface?