Friday, 15 December 2006

iNMR reader

Requires Mac OS X 10.3; Universal binary. Reads Varian, Bruker, Jeol, JCAMP-DX, Tecmag, SwaN-MR, Siemens, GE medical and Spinsight files; can process, fit and simulate; creates pictures and PDF files. Price: 30 euro (includes unlimited updates).

Thursday, 14 December 2006

Freeware

A well-written list of NMR freeware can be found at:

http://www.pharmacy.umaryland.edu/PSC/NMR/proc_anal/tecmag.html

It's 3 years old, which is, unfortunately, too much for such a rapidly evolving field. Apart from this inconvenience, it's nearly perfect. The only (minor) defect is that the list contains more than one entry per operating system. My ideal list would contain a single recommendation per OS.

The question is: why is there so much NMR freeware around that all web lists are rapidly outdated? It is well established that the authors of freeware never become famous, and I mean any kind of freeware, not just the NMR niche. If NMR freeware is never celebrated, and if the author of any free program is never remembered, the probability of becoming famous by writing it is zero. Only one word can explain why people write it: money. They write it for the money and they stop writing it when the money stops. This has been my case and the case of all the NMR freeware I know about. I used to receive my regular salary when writing freeware and gave it up to write commercial software.

Unfortunately for the users, the day arrived when the sponsors realized that this kind of software brings no fame. The only hope is to become the standard of the field. NMRPipe came close to that status, yet was limited in platforms (only UNIX) and in applications (mainly 2D). All that the sponsor can get is a publication, written by the author himself. In some cases they may add a poster or a follow-up paper, and by that time they realize they are scraping the bottom of the barrel. No external author ever cares to write a review. Even YouTube movies get their reviews, but NMR programs don't. Nauseating as it may be, this is the reality. When the sponsor realizes that even the stinkiest movie gets more attention than the best-crafted NMR program, that's the moment when the flow of money stops. And when the money stops arriving, the programmer (being no saint) quits.

I don't mean that things would have gone differently, but it certainly wouldn't have hurt if the beneficiaries had cited their software more frequently, reviewed it, evangelized it. The beneficiaries of freeware also receive their salaries and their grants, therefore their choice was not dictated by economic motives. If they had really loved the free software they'd have at least tried to save it. They didn't love it and didn't care. They decided for the death of NMR freeware and, if it were not for the residual stupidity of the sponsors, they'd have almost fulfilled their intent.

How does a program die? It is constantly fighting on four fronts:

- advancements in NMR

- changes in file formats

- changes in operating systems, processors, compilers, libraries, etc.

- competition from other NMR programs

The lethal bullet can arrive from any of these sources, but the first cause alone would suffice. NMR is so vast a field that all programs have at least one hole: their authors never find the time to cover the whole gamut.

At the risk of being held liable, today I uploaded the definitive version of SwaN-MR to http://www.inmr.net/index.html#DOWN. The sponsor forgot its existence. I wrote it but it's not mine. If you are so poor that you couldn't buy a new Mac in the last four years, chances are that SwaN-MR will run on your old machine. If you are lucky enough to own a vintage 68K Mac (mine died), look somewhere else.

Tuesday, 12 December 2006

XWin-NMR

For most of its life cycle, XWin-NMR contained a huge hole and nobody complained. I wonder if Bruker is more a cult than an instrument maker. Whatever Bruker does is forgiven by its loyal customers. In the case I am writing about, the temperature stored in your file had nothing to do with the actual temperature at which the spectrum was acquired. For example: the probe was at 240 K and the software reported 298 K. The reason was obvious, almost natural: the temperature could be controlled by XWin-NMR, but in practice you were (and still are) using another program, called "edte", which operates directly on the thermal unit and can't be aware of the active data set, or of the existence of a spectrometer, for that matter. With edte you can set and monitor the temperature of the probe, but the value is not saved in the spectrum (the spectrum belongs to XWin-NMR, not to edte).

It was unbelievable that at the same time Bruker could claim to care about GLP. The situation went on in this fashion for many years. The last generation of XWin-NMR solved the problem: when the acquisition is completed, the software reads the temperature of the probe and stores this value in the spectrum.

This anecdote explains why long-time Bruker users admire its successor, TopSpin. The cause of their happiness is the end of their past sufferings.

Monday, 11 December 2006

Nuts

I don't know the history of Acorn and generally don't like to express my thoughts about people. Unfortunately I have assigned myself the task of fighting the general laziness of the web and now I am forced to describe Acorn's main product, called NUTS (NMR Utility Transform Software). The bad thing is not NUTS, but the laziness of the web. You can find countless lists of NMR software whose only effect is a great loss of time for all their readers. Most of the programs you can find have serious limitations and can be considered unusable. The compilers of these lists never find the time to download and test the programs, write a review and discourage you from doing the same.

This particular case (NUTS) is a borderline one. The program is worth seeing because it is a living fossil, just as its nice vintage icon suggests. Using NUTS can be as much fun as visiting a museum. The web site is informative, readable, moderately vintage (by today's web standards) and certainly a valuable source of information. I greatly appreciate the simplicity of the pricing policy, its transparency, the immediate availability of a downloadable demo, etc.: a lot of things that I am in tune with.

There is nothing wrong in writing a program in an ancient DOS-like style. It's the right answer to the hundreds of icons of TopSpin (there are no icons in NUTS). I am seriously concerned, however, at the mere idea that in 2006 a chemist could invest money in buying such a fossil. The user can only open a single window with a single pane, and two spectra cannot remain open simultaneously. There is something more unusual, though. In a single word: this program is modal, if you know what that means. Before testing NUTS I believed that all Bruker software was modal. Today I must say that Bruker software is 40% modal, while NUTS is pure modal. It's so modal that the manual doesn't even mention the existence of modes and calls them "subroutines". There are 9 of them, plus the starting mode, called "base level". In simpler words: there is a total of 10 different interfaces (just like ten different programs) and the user is expected to learn them all. I wonder how anybody can remember where the commands are because, even when the menus bear familiar names like "Edit" and "View", the menu items are highly non-standard. It's not a matter of opinion, it's a matter of design. I mean: if you are writing a program for the Macintosh, as Acorn is doing, you should adhere to clearly stated principles and practices. The principle is that half of your commands should be the familiar "Copy", "Paste", "Undo"... in their familiar menu positions.

The good thing is that you can just ignore the menus. All the NUTS commands can and should be typed on the command line. Menus have an aesthetic function. At the base level you find six equivalent menu commands: "Processing Parameters", "Set LB", "Apply EM", "Apply GM", "Set Sine Phase", "Apply Sine Multiply". They all open the same dialog window!

A noteworthy property of most menu items is that they are not context-sensitive. They are never dimmed, even when there is no spectrum "on stage". In practice, half of the commands, if given at the start of the program, crash the program itself! It's also funny to see that they make no distinction between FID and spectrum. Knowing "what's on" is 100% the responsibility of the user. The sine-bell multiplication, for example, which is intended for FIDs, can easily be applied to transformed spectra, even though I can't find any good reason for such an operation.

All the above things are today considered "non-professional", yet they represent surmountable defects. The average user can tell by himself whether he is working on a FID or on a spectrum, and he will soon learn to do without menus and use the command line exclusively. Now you also know why there is no "Undo" command: the program crashes as soon as you make a mistake, nobody ever had the opportunity to use "Undo" and it was eventually removed. By the same logic, however, most of the remaining menu items could have been removed. For example, the first 6 items of the "Process" menu, plus the six other items already cited, could all have been put into a single dialog.

In my exploration I haven't found the commands to increase/decrease the apparent intensity of the spectrum. It may be a limitation of the demo version, but at this point I am full of doubts and believe in nothing. Is NUTS actually "usable"? The web site says that they are developing the Mac version because they received specific requests in that direction. How is it possible that somebody who loves the Mac can order a program driven by the command line? How is it possible that they sell NUTS 1D at 499 USD when the fully fledged Mestre-C sells for only 265 euro?

This review refers to wxNuts version 0.6.0, compiled on October 19, 2006.

Topspin NMR free download

Did you know there is a long list of NMR programs that can be freely downloaded from the internet and work with your Bruker files? Check them out!

iNMR reader

Spinworks

MatNMR

NPNMR

NPK

CCPN

Other free programs are available upon request (with some restrictions):

Jeol Delta

NMRPipe

NMRnotebook

ACD

Nuts

Saturday, 9 December 2006

NMR googling

Building your personal NMR database couldn't be simpler. All you have to do is process your spectra as usual. They are automatically indexed by Spotlight and can be searched either with Spotlight or with Speclight (free).

It has always been possible, on spectrometers of any brand, to store a "title" that describes the sample and the experiment. iNMR automatically imports that text and feeds Spotlight with it and with other notes you insert with the acclaimed iNMR annotation tools. To increase your search options you can also:

- Include the elemental formula of your compounds (and of your impurities too!). If you include this information you can perform searches based on the molecular weight, the number of carbon atoms, etc.

- Include the SMILES strings of your compounds (it's a simple matter of copying and pasting). If you include this information you can perform searches based on molecular fragments.

- Perform peak-picking. If you are not used to it, start today. Even if you hide the output, you'll be able to find peaks wherever they are, simply by typing a range of chemical shifts. Very handy for recognizing impurities!
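As an illustration of the first point, here is a minimal Python sketch of how an elemental formula can be turned into searchable numbers (molecular weight, number of carbon atoms). It is not iNMR's or Spotlight's code: the function names and the short table of atomic weights are assumptions made for the example, and the parser only handles plain formulas such as C2H6O, without brackets or charges.

```python
import re

# Rounded standard atomic weights; only a handful of elements, for illustration.
ATOMIC_WEIGHTS = {"H": 1.008, "C": 12.011, "N": 14.007,
                  "O": 15.999, "F": 18.998, "Cl": 35.45}

def parse_formula(formula):
    """Return a dict mapping each element symbol to its atom count."""
    counts = {}
    for symbol, number in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        counts[symbol] = counts.get(symbol, 0) + (int(number) if number else 1)
    return counts

def molecular_weight(formula):
    """Sum the atomic weights of all atoms in a plain elemental formula."""
    return sum(ATOMIC_WEIGHTS[s] * n for s, n in parse_formula(formula).items())

counts = parse_formula("C2H6O")  # ethanol
print("carbon atoms:", counts["C"])
print("molecular weight:", round(molecular_weight("C2H6O"), 3))
```

Once these numbers are stored next to the spectrum, a search such as "all compounds with 2 carbon atoms" becomes a simple numeric comparison.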

All your spectra will be indexed, old and new ones, after you install iNMR 1.6. You can also use Speclight to retrieve chemical files (indexed by ChemSpotlight).

Sunday, 3 December 2006

Speclight

Speclight is a free application, with apparently no precedent, that retrieves NMR spectra and other documents in the file system (even on a network). It requires Mac OS X Tiger and, possibly, iNMR 1.6. When I say "other documents" I mean those indexed by ChemSpotlight. Both the cost and the effort of building a personal NMR database are suddenly reduced to zero. Speclight will debut next week; source code available upon request.

Tuesday, 28 November 2006

I did it!

Friday, 24 November 2006

Pepper

Mac users are usually proud of their machines and they should be even more so. Their operating system has a hidden engine running, very quietly, and putting everything into a catalog. It's called Spotlight and it's not secret at all, because every Tiger owner has used it at least once. In practice it's a substitute for human memory. You type a word like "pepper" and it searches your computer for everything related to it: recipes, pictures, mails, PDF documents and, last but not least, the music of the late great Art Pepper. It works like Google but it's even faster: the first results appear before you type the final "r" in pepper! This engine can, potentially, search anything. You can, for example, ask: "Search my computer for all carbon spectra containing a peak between 90 and 95 ppm" and can also restrict the search to a specific magnetic field, or solvent, or year. Potentially you can also search for all molecules containing a given substructure. The great thing is that it all comes with no effort. It's not like creating and building a database. The system does everything automatically every time a file is copied, changed or deleted. And it's also free. Do you want to learn how to do it?

- The first component is the terminal command "mdfind". The simplest example of usage is: "mdfind pepper", which is equivalent to typing "pepper" into the Spotlight search field. Substitute a more articulate query expression for "pepper" and you can tailor the search any way you like.

- A Spotlight plug-in that can parse NMR documents. The forthcoming version of iNMR (1.6) comes with a bundled plug-in. A plug-in can also be a stand-alone bundle. It is possible to create plug-ins for every kind of document, generated by any application. For example you can create a plug-in for Varian spectra, copy all your back-ups onto the hard disc, and have them all indexed.

- Possibly a graphic interface that hides the complexity of the terminal from the average Mac user. Just like the plug-in, it can be written by any volunteer; it need not reside inside an NMR application. I am also going to write this small freeware application, which should appear in 2006, and should later be integrated into iNMR.

- The complete integration into iNMR represents the perfect solution. Whenever you find an unexpected and unknown peak in a spectrum, you'll directly ask the computer: "Where else have you seen such a thing?", and it will open, on the spot, all the old spectra containing a peak at the same position.
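To make the first component concrete, here is a small Python sketch that only builds mdfind command lines, without running them (mdfind exists only on Mac OS X). The "-onlyin" option is a standard mdfind flag; the attribute name "net_example_nmr_peaks" and the folder path are hypothetical, since the real names depend on what the NMR plug-in publishes to Spotlight.

```python
import shlex

# Hypothetical metadata attribute that an NMR plug-in might publish.
PEAK_ATTRIBUTE = "net_example_nmr_peaks"

def mdfind_command(query, only_in=None):
    """Return the argument list for an mdfind call (not executed here)."""
    argv = ["mdfind"]
    if only_in:
        argv += ["-onlyin", only_in]
    argv.append(query)
    return argv

def peak_range_query(attribute, low_ppm, high_ppm):
    """Raw Spotlight query matching values of `attribute` between two limits."""
    return f"{attribute} >= {low_ppm} && {attribute} <= {high_ppm}"

# Equivalent to typing "pepper" into the Spotlight field.
print(shlex.join(mdfind_command("pepper")))

# A peak between 90 and 95 ppm, restricted to one (hypothetical) folder.
query = peak_range_query(PEAK_ATTRIBUTE, 90, 95)
print(shlex.join(mdfind_command(query, only_in="/Users/me/spectra")))
```

The returned list can be handed to subprocess.run on a Mac; a graphic front end would do little more than assemble such queries from a few text fields.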

You understand that I will be busy in the next few weeks...

Wednesday, 22 November 2006

IUPAC

When we report the chemical shift of a peak, we can use two units, according to IUPAC. The most recent recommendation I have found is:

Pure Appl. Chem., Vol. 73, No. 11, pp. 1795–1818, 2001.

©2001 IUPAC

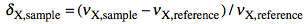

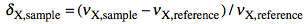

which defines the δ as:

and the Ξ as:
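In plain notation, and as far as I can transcribe them from the 2001 recommendation (check the symbols against the original article), the two definitions read as follows. δ is normally quoted in ppm, that is the ratio multiplied by 10^6, while Ξ is a percentage:

$$
\delta_{X,\mathrm{sample}} = \frac{\nu_{X,\mathrm{sample}} - \nu_{X,\mathrm{reference}}}{\nu_{X,\mathrm{reference}}}
$$

$$
\Xi / \% = 100 \times \frac{\nu_{X}^{\mathrm{obs}}}{\nu_{\mathrm{TMS}}^{\mathrm{obs}}}
$$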

The latter term is the measured 1H frequency of TMS (dilute solution in chloroform). The δ scale is limited to a single nuclide, while the Ξ scale is a unified scale valid for all nuclei. The unified scale simplifies the experimental practice for the exotic nuclei, but generates unusual figures with 6 decimal digits. I have always worked with hydrogen and carbon and have never found a chemical shift reported in Ξ units. I am perplexed because IUPAC says:

IUPAC recommends that a unified chemical shift scale for all nuclides be based on the proton resonance of TMS as the primary reference.

and also

In the future, reporting of chemical shift data as Ξ values may become more common and acceptable.

Consider that the above formula for Ξ is only apparently simple: the input values are not normally found inside NMR spectral files. Even if they are, the chemist is not used to calculating the chemical shift: he reads it. To really switch to the unified scale it would be necessary that:

What's really good about the cited article is that it includes all the information on the subject, and we don't have to consult older recommendations (the contrary happened with the definition of JCAMP-DX for NMR, unfortunately). In the following part of this post I will discuss the old δ unit exclusively. Citing again the IUPAC article:

Unfortunately, older software supplied by manufacturers to convert from frequency units to ppm in FT NMR sometimes uses the carrier frequency in the denominator instead of the true frequency of the reference, which can lead to significant errors.

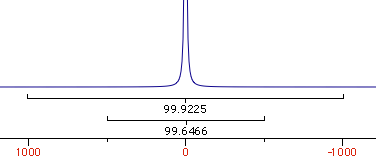

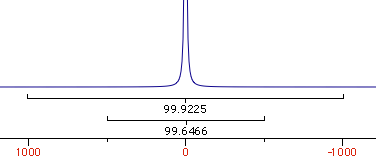

The carrier frequency is the frequency of the transmitter (the center of the spectral width). The reference frequency is the absolute frequency (in the laboratory frame) of the reference compound (TMS, just to materialize the idea). You know that TMS is usually at the far right of the spectrum, so there is a noticeable difference between the two frequencies. When the spectrum leaves the spectrometer, how can you tell which of the two values are exported with the file? I have opened a XWin-NMR file and found:

##$SFO1= 600.1324441176

##$SW= 8.26537126752216

##$SW_h= 4960.31746031746

You can verify that SFO1 = SW_h / SW. _Assuming_ that XWin-NMR follows the IUPAC convention, SFO1 is the frequency of TMS. I don't know what happens with other programs. And I don't know what happens when the user changes the scale reference. According to the rules, if he shifts the scale of even a minimal quantity, let's say 0.001 ppm, the last digits of SFO1 should change.

I know for sure that it is not so with iNMR, which is wrong. iNMR accepts the frequency value found into the original file and keeps it constant (unless the user changes it explicitly). It is possible to recalculate the reference value every time that the TMS position is redefined, but it's almost a paradox. If the users says that the scale is not correct, then other parameters may also be wrong. In order to follow the rule, the program must perform a calculation based on dubious values. If I don't follow the rule, in the common case that the scale is shifted by 0.1 ppm or less, I know to make an error in the order of 10-7. In practice an error of less than 10-4 can be tolerated. Today I prefer to introduce this minimal error, instead of altering the value for the spectrometer frequency. The user is free, however, to set both the value for the spectrometer frequency and the TMS position. Tomorrow, if I change my mind, I can make the process automatic.

Pure Appl.Chem., Vol.73, No.11, pp.1795–1818, 2001.

©2001 IUPAC

which defines the δ as:

and the Ξ as:

The latter term is the measured 1H frequency of TMS (diluted chloroform solution). The δ scale is limited to a single nuclide, while the Ξ scale is a unified scale valid for all nuclei. The unified scale simplifies the experimental practice for the exotic nuclei, but generates unusual figures with 6 decimal digits. I have always worked with hydrogen and carbon and have never found a chemical shift reported in Ξ units. I am perplexed because IUPAC says:

IUPAC recommends that a unified chemical shift scale for all nuclides be based on the proton resonance of TMS as the primary reference.

and also

In the future, reporting of chemical shift data as Ξ values may become more common and acceptable.

Consider that the above formula for Ξ is only apparently simple: the input values are not normally found inside NMR spectral files. Even if they are, the chemist is not used to calculate the chemical shift: he reads it. To really switch to the unified scale it would be necessary that:

- All journals require the chemical shift expressed in Ξ units.

- All spectrometers have their software updated, so the scale can optionally be expressed in the new unit (who's going to pay?).

- The absolute frequency of 1H of TMS, measured when installing the instrument, be saved into every file.

- Some standard rule governs the previous point, so all programs can read spectra from all instruments.

What's really good about the cited article is that it includes all the information on the subject, and we don't have to consult older recommendations (the contrary happened with the definition of JCAMP-DX for NMR, unfortunately). In the rest of this post I will discuss the old δ scale exclusively. Quoting the IUPAC article again:

Unfortunately, older software supplied by manufacturers to convert from frequency units to ppm in FT NMR sometimes uses the carrier frequency in the denominator instead of the true frequency of the reference, which can lead to significant errors.

The carrier frequency is the frequency of the transmitter (the center of the spectral width). The reference frequency is the absolute frequency (in the laboratory frame) of the reference compound (TMS, to give a concrete example). You know that TMS is usually at the far right of the spectrum, so there is a noticeable difference between the two frequencies. When the spectrum leaves the spectrometer, how can you tell which of the two values is exported with the file? I have opened an XWin-NMR file and found:

##$SFO1= 600.1324441176

##$SW= 8.26537126752216

##$SW_h= 4960.31746031746

You can verify that SFO1 = SW_h / SW. _Assuming_ that XWin-NMR follows the IUPAC convention, SFO1 is the frequency of TMS. I don't know what happens with other programs. And I don't know what happens when the user changes the scale reference. According to the rules, if he shifts the scale by even a minimal amount, let's say 0.001 ppm, the last digits of SFO1 should change.
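The check takes a few lines of Python. The numbers are the ones copied from the file above; all it proves is that SW (in ppm) was obtained by dividing SW_h by SFO1, not which physical frequency SFO1 represents.

```python
# Values copied from the XWin-NMR parameter file quoted above.
SFO1 = 600.1324441176      # MHz
SW = 8.26537126752216      # spectral width in ppm
SW_H = 4960.31746031746    # spectral width in Hz

# Hz divided by ppm gives MHz, because 1 ppm of an N MHz frequency is N Hz.
ratio_mhz = SW_H / SW

print(f"SW_h / SW = {ratio_mhz:.10f} MHz")
print(f"SFO1      = {SFO1:.10f} MHz")
print("agree within 1 Hz:", abs(ratio_mhz - SFO1) < 1e-6)
```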

I know for sure that iNMR does not do this, which is wrong. iNMR accepts the frequency value found in the original file and keeps it constant (unless the user changes it explicitly). It is possible to recalculate the reference value every time the TMS position is redefined, but it's almost a paradox. If the user says that the scale is not correct, then other parameters may also be wrong. In order to follow the rule, the program must perform a calculation based on dubious values. If I don't follow the rule, in the common case that the scale is shifted by 0.1 ppm or less, I know I am making a relative error of the order of 10⁻⁷. In practice an error of less than 10⁻⁴ can be tolerated. Today I prefer to introduce this minimal error, instead of altering the value of the spectrometer frequency. The user is free, however, to set both the value of the spectrometer frequency and the TMS position. Tomorrow, if I change my mind, I can make the process automatic.
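The size of that error can be checked with a short calculation. This is a sketch, assuming a peak that sits at exactly 10 ppm on the corrected scale and a zero moved by 0.1 ppm toward higher frequency; the function name and the numbers are illustrative, not iNMR code.

```python
def relative_error_from_stale_frequency(old_reference_mhz, shift_ppm, peak_ppm=10.0):
    """Relative error in a chemical shift when the zero of the scale is moved
    by shift_ppm but the old reference frequency stays in the denominator."""
    new_reference_mhz = old_reference_mhz * (1.0 + shift_ppm * 1e-6)
    offset_hz = peak_ppm * new_reference_mhz      # distance of the peak from the new zero
    exact_ppm = offset_hz / new_reference_mhz     # what the rule prescribes
    stale_ppm = offset_hz / old_reference_mhz     # what you get keeping the old value
    return (stale_ppm - exact_ppm) / exact_ppm

error = relative_error_from_stale_frequency(600.1324441176, 0.1)
print(f"relative error: {error:.3e}")
```

The relative error equals the shift itself expressed as a fraction (0.1 ppm is 10⁻⁷), whatever the position of the peak, which is why it stays far below the tolerable 10⁻⁴.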

Tuesday, 21 November 2006

Mistakes

On the surface, a program to process NMR spectra is like any other computer program: in the end it consumes ink and paper and, if the user is happy with the printout, he quits the program. The fundamental difference, in the NMR case, is that the user is forced to accept the result even if it is slightly wrong. If, for example, there is a very small error in the calculation of integrals, or the spectrum and the scale are misaligned by 1 mm, or the relaxation time has not been correctly estimated, how can the user recognize the mistake? Comparing the results of two different programs is easy, but time-consuming, and not everybody has two programs to compare. This is also what I do as a programmer. Sometimes, when I can't find a second program, I write two different algorithms and test them against each other. Some subtle differences are really difficult to discern; I remember a couple of cases in which it took months. Version 1 of SwaN-MR (the version that nobody used) calculated wrong integrals. The mistake became evident, however, as soon as the program was used in practice.
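Testing two algorithms against each other can be as simple as the following Python sketch, which integrates a synthetic Lorentzian peak with the trapezoidal rule and with Simpson's rule and compares both with the analytical value. The peak, the grid and the function names are choices made for illustration; this is not code from SwaN-MR or iNMR.

```python
import math

def lorentzian(x, center=0.0, half_width=1.0):
    """Lorentzian line normalized to unit area over the whole axis."""
    return (half_width / math.pi) / ((x - center) ** 2 + half_width ** 2)

def integrate_trapezoid(ys, dx):
    return dx * (sum(ys) - 0.5 * (ys[0] + ys[-1]))

def integrate_simpson(ys, dx):
    if len(ys) % 2 == 0:
        raise ValueError("Simpson's rule needs an odd number of points")
    odd = sum(ys[1:-1:2])    # points 1, 3, 5, ...
    even = sum(ys[2:-1:2])   # points 2, 4, 6, ...
    return dx / 3.0 * (ys[0] + ys[-1] + 4.0 * odd + 2.0 * even)

# Sample the peak on a regular grid from -50 to +50 half-widths.
N_POINTS, LIMIT = 2001, 50.0
dx = 2.0 * LIMIT / (N_POINTS - 1)
ys = [lorentzian(-LIMIT + i * dx) for i in range(N_POINTS)]

area_trapezoid = integrate_trapezoid(ys, dx)
area_simpson = integrate_simpson(ys, dx)
area_exact = 2.0 * math.atan(LIMIT) / math.pi  # analytical integral over the same range

print("trapezoid vs Simpson:", abs(area_trapezoid - area_simpson))
print("trapezoid vs exact:  ", abs(area_trapezoid - area_exact))
```

If the two numerical results disagree by more than the expected rounding, at least one of the two routines is wrong, and the analytical value tells which.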

Version 0.1 of iNMR drew 1D spectra shifted by 1 spectral point (in many cases less than 0.1%). When the scale was calibrated (against the... shifted spectrum), the error was perfectly compensated, because it was constant. There was no way to notice or demonstrate the bug, because the spectrum and the scale were perfectly aligned, until I wrote the peak-picking function. The output of peak-picking was constantly shifted by 1 point and in this way the bug was revealed. In that case it was a benign bug with no consequence. My experience is that there is no reason to trust a programmer. The user should find the time to personally test the software, at least those parts that are essential for his work. Remember that programs come with no warranty (how could it be different?).
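A toy model shows why a constant drawing offset hides itself until a second function looks at the raw data. This is a sketch in Python, not the actual iNMR code: the linear scale, the point indices and the `DRAW_OFFSET` constant are all hypothetical, introduced only to reproduce the kind of compensation described above.

```python
N_POINTS = 1024
SW_PPM = 10.0                       # hypothetical spectral width
PPM_PER_POINT = SW_PPM / N_POINTS


def ppm_of_index(index, ref_index, ref_ppm=0.0):
    """Linear scale: the shift decreases from left to right as the index grows."""
    return ref_ppm - (index - ref_index) * PPM_PER_POINT


DRAW_OFFSET = 1                     # the bug: every point is drawn one position late

tms_true_index = 900                # where the TMS maximum really is in the data
peak_true_index = 300               # another peak of the same spectrum

# The user calibrates on screen, clicking on the TMS peak as it is drawn.
ref_index = tms_true_index + DRAW_OFFSET

# Reading the drawn peak against the scale: the two offsets cancel out.
on_screen = ppm_of_index(peak_true_index + DRAW_OFFSET, ref_index)
# Peak-picking works on the data, not on the drawing, so nothing cancels.
picked = ppm_of_index(peak_true_index, ref_index)
# What a bug-free program would report.
correct = ppm_of_index(peak_true_index, tms_true_index)

print(f"on screen: {on_screen:.4f} ppm")
print(f"picked:    {picked:.4f} ppm")
print(f"correct:   {correct:.4f} ppm")
```

In this model the picture is right and only the peak list is off by one point, which matches the way the bug came to light.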

Assuming that the NMR software is perfect, the printed output can still be misleading. Is it possible to tell by inspection if the processing has been performed correctly? The first thing that I observe is the shape of the peaks. It tells whether the sample has been accurately shimmed and whether the user relied exclusively on automatic phase correction or, instead, spent the canonical minute on manual phase correction. These things are not as important as baseline correction or accurate referencing against TMS, and are unrelated to those other fundamental steps, but they are diagnostic hints about the experience and the patience of the user. Including the TMS peak (or a solvent peak) in the peak-picking can be useful to demonstrate that the scale has been correctly referenced. Including the graphical integrals or, better, integrating pieces of pure baseline, can show how flat the latter is. These expedients are not enough, however: small deviations of the integral from zero are not graphically evident and a single baseline sample is only a partial demonstration. The TMS position deserves another article; I hope to write it soon.

Unfortunately the above expedients are not elegant and reduce the clarity and readability of the printed spectrum. What happens, however, if you discover, from the printout, that the processing is inaccurate? When you discover a grammatical error in the draft of an article you can correct it and print again. Can you do the same with the spectrum, in the absence of the raw data? Even if you have an accurate log file of all processing operations, what is it for, if you are not allowed to reprocess the spectrum?

The printed spectrum cannot be replaced because all magnetic and optical supports have been a failure (if their purpose was to save the information for posterity). The CD seems to be more durable than magnetic supports, but it is already being replaced by the DVD and, most of all, we have no proof that our CDs will still be readable 20 years from now. Store the spectra on paper, but inspect them on screen, where it is possible to check the quality of processing. The point is that most casual users lack the basic know-how to judge the quality of a spectrum. The apparent complexity of 2D spectroscopy effectively stops inexperienced users, but in the more familiar 1D environment they feel free to process spectra as they like. Processing 2D spectra is like using a word-processor: the effect of a mistake is apparent even to the uninitiated. The common mistake, in 1D spectroscopy, is to forget that it's a branch of science and that it is to be approached with a minimal knowledge of the field. I admit the sacrosanct right of the user to ignore the manual, but he must already know the steps of routine processing, even when the processing is completely automatic. If he knows how NMR processing works, he can learn the program by trial and error. It's not his/her fault if manuals are boring. My personal advice: read them!

Monday, 20 November 2006

Sunday, 19 November 2006

Self-Promotion

Today iNMR is a Universal Binary application.

This is the final stage of a long endeavor. I have added to iNMR all the features requested by hundreds of users of all disciplines and the program is still extremely compact, elegant and immediate. It integrates perfectly with Mac OS X, also because it's the only NMR program which adheres to the Apple guidelines. Now that Apple is selling millions of computers, other software houses are hastily porting their products from Windows, but they can never achieve the same level of integration. Even admitting that these other programs will be ported, they will still look like strangers in Mac OS X.

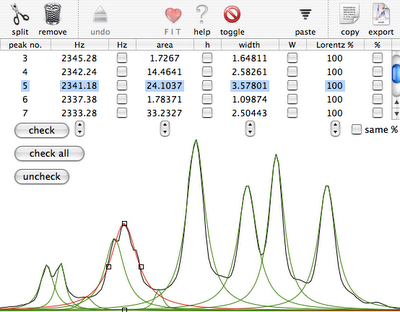

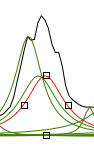

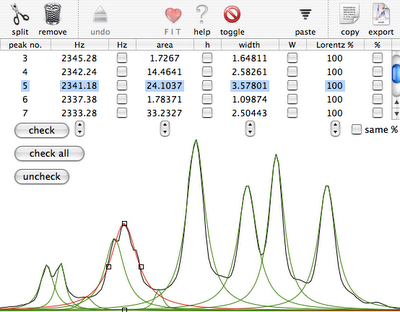

iNMR is so vast that it can be compared to a suite of programs: a processor, an annotator, two modules to simulate both static and dynamic NMR spectra, a versatile line-fitting module, a host of importing filters and another host of exporting functions. To control all these operations you don't have to navigate through long menus or countless palettes, nor to memorize the command names. You have certainly appreciated the clear and simple interface of iNMR.

Despite the wealth of features, most users have never reported a single bug. The few reported errors have been swiftly corrected and a new version has appeared within 24 hours of their discovery. This is certainly the most important thing you wanted to know.

You are kindly invited to download and try the latest version 1.5.6 and to visit the site www.inmr.net for further news.

Practice

Why can integral values never be accurate? I have prepared a set of 2 examples. One contains a single peak in time domain, the other contains a single lorentzian shape. In each case it's possible to alter the linewidth or even to change the shape to gaussian. As usual, the required reader is iNMR, which in turn requires Mac OS X. In the time domain example all points are equal to 1. The shape is given exclusively by the weighting function. I have set it to an exponential equivalent to a 10 Hz broadening. After FT the signal is as large as the spectrum; in other words, it never reaches zero. There is a significant baseline offset, which actually also contains the tails of the lorentzian. In the figure below I have already subtracted this offset (the equivalent of multiplying the first point of the FID by 0.5).

When the integrated region is 100 times the linewidth, the area is still significantly short of 100%. From the other spectrum I have measured slightly different values, because I have arbitrarily set 100 equal not to the total area, but to the area of a region 2500 times larger than the linewidth.
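The time-domain example can be imitated outside iNMR with a short Python sketch. The number of points and the dwell time are hypothetical (the text only fixes the 10 Hz broadening), and the unscaled discrete transform with a negative exponent is an assumed convention, not necessarily the one used by the program.

```python
import cmath
import math

N_POINTS = 4096
DWELL_S = 1.0 / 5000.0    # hypothetical dwell time (5 kHz spectral width)
LB_HZ = 10.0              # exponential broadening, as in the text

# FID: every point equals 1, so the shape comes from the weighting alone.
fid = [math.exp(-math.pi * LB_HZ * n * DWELL_S) for n in range(N_POINTS)]


def spectrum_point(data, k):
    """Real part of the discrete FT of data at point k (plain sum, no scaling)."""
    n_pts = len(data)
    total = sum(
        x * cmath.exp(-2j * cmath.pi * k * n / n_pts) for n, x in enumerate(data)
    )
    return total.real


far_point = N_POINTS // 2   # the peak sits at point 0; this is the farthest point

raw_peak = spectrum_point(fid, 0)
raw_tail = spectrum_point(fid, far_point)

# Halving the first point subtracts fid[0] / 2 from every point of the spectrum.
corrected = [fid[0] * 0.5] + fid[1:]
fixed_tail = spectrum_point(corrected, far_point)

print(f"peak height:               {raw_peak:.2f}")
print(f"far tail, plain FT:        {raw_tail:.5f}")
print(f"far tail, first point x0.5: {fixed_tail:.5f}")
```

With these numbers the far end of the spectrum sits at about half the value of the first FID point before the correction and very close to zero after it; what remains is the tail of the lorentzian.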

| interval/linewidth | area % |
| --- | --- |
| 10 | 93.6 |
| 20 | 96.8 |
| 100 | 99.4 |
| 250 | 99.7 |
| 500 | 99.9 |
| 5000 | 100 |

With your experimental spectra there is no need to define integration intervals as wide as those shown above. Everything works fine because linewidths are comparable and the error is almost constant. Expect to measure lower integrals for wider peaks, even when the relaxation delay is very long.
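The measured percentages can be compared with the textbook result for an ideal lorentzian. The fraction of the total area falling inside a centred interval n times wider than the linewidth (full width at half height) is (2/π)·arctan(n). The Python sketch below evaluates this formula; it is an analytical check, not the way the values in the table were obtained, so agreement is expected only to within rounding and digitization (about 0.1%).

```python
import math


def lorentzian_area_percent(interval_over_linewidth):
    """Percentage of the total area of an ideal lorentzian found inside a
    centred interval, given as a multiple of the full width at half height."""
    return 100.0 * (2.0 / math.pi) * math.atan(interval_over_linewidth)


for ratio in (10, 20, 100, 250, 500, 5000):
    print(f"{ratio:>5}  {lorentzian_area_percent(ratio):7.2f}")
```

Because the missing area depends only on the ratio between interval and linewidth, two peaks integrated over equally wide regions lose different amounts when their linewidths differ: the wider peak loses more.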

Theory

NMR means nuclear magnetic resonance. It's an analytical technique by which atomic nuclei and, indirectly, their surroundings, are revealed. It's called magnetic because a magnet is used to enhance the (otherwise negligible) energy of the nuclei. It's a resonance phenomenon because the nuclei, when excited by a wave of the right frequency, respond with an analogous wave. The NMR experiment is conceptualized in a rotating coordinate system. For the time being you just have to pretend it is a plain Cartesian system of coordinates xyz. A radio-frequency pulse tilts the magnetization of the nuclei, initially in the z direction, into the xy plane for detection. Here each nucleus rotates at its own resonance frequency while two detectors sample the total magnetization at regular intervals along the x and y axes. The regular interval is called dwell time. The measured intensities along the x axis are called the real part of the spectrum and the intensities along y form the imaginary part. Real and imaginary are just names. They could have been called right and left or red and white and it would have been the same (or better). This is indeed the main difference between an NMR instrument and a hi-fi tuner. It probably serves to justify the price difference. You have to realize that the real and imaginary parts are both true experimental values of the same importance. These intensities are stored on a hard disk in the same order in which they are sampled (i.e. chronologically). A pair of values, one real and one imaginary, collected at the same time, constitutes a complex point. iNMR normally displays only the real part of the spectrum.

A complex spectrum is like a vector in physics. It can be characterized by its x and y components or by its magnitude (amplitude) and direction. iNMR lets you display the magnitude of the spectrum if you want. The direction of a complex point is called phase. The so-called "phase correction" is a process which mixes the real (x) and imaginary (y) components. A radio-frequency wave has frequency, amplitude and phase. Thus complex numbers are the natural choice to describe an RF signal. In an older experimental scheme a single detector measures the magnetization along both axes. In this case the sampling cannot be simultaneous; it is in fact sequential, and the spectrum is known as real only. Actually it can be (and is) manipulated just like a normal simultaneous, complex, spectrum. A simple ad hoc correction is needed when transforming the spectrum into the "frequency domain".
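The vector picture translates directly into complex arithmetic. Here is a minimal Python sketch, with an arbitrary point chosen for illustration; the function name `phase_correct` is my own and does not refer to any iNMR command.

```python
import cmath
import math

# One complex point: intensity along x (real) and along y (imaginary).
point = complex(3.0, 4.0)

magnitude = abs(point)                          # length of the vector
phase_deg = math.degrees(cmath.phase(point))    # its direction, in degrees


def phase_correct(z, angle_deg):
    """Zero-order phase correction: rotate a complex point by angle_deg.
    The rotation mixes the real and imaginary components."""
    return z * cmath.exp(1j * math.radians(angle_deg))


# Rotating by minus the phase brings the whole vector onto the x axis.
rotated = phase_correct(point, -phase_deg)

print(f"magnitude = {magnitude:.3f}, phase = {phase_deg:.2f} degrees")
print(f"after correction: real = {rotated.real:.3f}, imaginary = {rotated.imag:.3f}")
```

The magnitude is untouched by the rotation, which is why a magnitude display needs no phase correction at all.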

Let's explain what this last term means. What we have been speaking of up to now is a not very meaningful function of time called FID (free induction decay; induction is another way to refer to magnetization). It is not very meaningful because all the nuclei resonate at the same time. If Pavarotti and I were singing together, it would be easy for you to tell our individual contributions to the choir apart. But you are not trained to discriminate among a collection of atomic nuclei resonating together. The Fourier transformation (FT) is a mathematical tool that separates the contribution of each nucleus by its resonance frequency. The FID is a function of time, while the transformed spectrum is a function of frequency. The FT requires a computer and this is why you need a computer and a software application if you want to do some NMR.

At first sight it may seem that the function of frequency should extend between −∞ and +∞. Actually the sampling theorem states that it only has to be calculated in the interval from 0 (included) to Ny (excluded). Ny = Nyquist frequency = sampling rate = reciprocal of the dwell time. Let's take a pause. What's the angle whose sine is 1? My pocket calculator says: 90°. I say: 90° ± n·360°. Who is right? Both! Coming back to our NMR experiment, suppose a signal is so fast that it rotates by exactly 360° during the dwell time. The two detectors will always see it in the same position, so they will believe it simply doesn't move (it has zero frequency). The same happens with four different signals which rotate by -350°, 10°, 370° and 730° during the dwell time. There is absolutely no way to tell which is which, unless you shorten the dwell time (a common experimental practice). A final case: you have two signals A and B, and A moves by 361° with respect to B during each sampling interval. You will get the impression that A is moving by only 1° each time. In conclusion, the maximum difference in frequency that can be detected is = number of cycles / time interval = 1 / dwell time = Nyquist. q.e.d. In NMR this quantity is called spectral width. All the books report different expressions for the Nyquist frequency and the spectral width. One day we should open a discussion on the subject.
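The four indistinguishable signals can be generated numerically. In this Python sketch (the helper name and the choice of 8 samples are arbitrary) each signal is a unit vector that advances by a fixed angle at every dwell time, and the samples are compared with those of the 10° signal.

```python
import cmath
import math


def sampled_signal(degrees_per_dwell, n_points=8):
    """Complex samples of a unit signal that rotates by the given angle
    during each dwell time."""
    step = math.radians(degrees_per_dwell)
    return [cmath.exp(1j * step * n) for n in range(n_points)]


reference = sampled_signal(10)

for angle in (-350, 10, 370, 730):
    samples = sampled_signal(angle)
    worst = max(abs(a - b) for a, b in zip(samples, reference))
    print(f"{angle:>5} degrees per dwell: largest difference = {worst:.1e}")

# A signal that is not a multiple of 360 degrees away is clearly different.
other = sampled_signal(20)
other_worst = max(abs(a - b) for a, b in zip(other, reference))
print(f"   20 degrees per dwell: largest difference = {other_worst:.1e}")
```

The differences among the four aliased signals are at the level of floating-point rounding; the sampled data simply carry no information to separate them.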

On a purely mathematical basis it doesn't matter how large the actual frequency range you have to record is. You can pretend the range starts at zero and extends far enough to include the maximum signal separation. In practice detectors work in the low-frequency range. So you have technical limitations, and this is only the first one. The resonance frequencies today are approaching the GHz. The frame of reference also rotates at a similar frequency, so the apparent frequency is in the range of kHz. With this reduced frequency the dwell time needs to be in the order of milliseconds. The problem is that you need to filter out all other frequencies because they contain nasty noise (didn't I say it was a hi-fi matter?). So we need the rotating frame to move from the ideal world of theory and become a practical reality. How is the rotating frame accomplished experimentally? A detector receives two signals, one coming from the sample under study and another which is a duplicate of the exciting frequency. The detector actually detects the difference between the two frequencies. To fully exploit the power of the pulse, the transmitter is put at the centre of the spectrum. Signals falling to the left of it appear as negative frequencies (well, here it is not important if you use the delta or the tau scale and if they are positive or negative; only the concept matters). We have said that the spectrum begins at zero. In fact, if you perform a plain FT, the whole left side appears shifted by the Nyquist frequency, and so ends up to the right of the right side!

The FT and its inverse show a number of interesting properties. The first one predicts that, if you complex-conjugate the FID, the transformed spectrum will be the mirror image of the original spectrum. In fact, if you invert the y component of a vector, you obtain its image across a mirror placed along the x axis. Anything rotating counter-clockwise (positive frequency) will appear as rotating clockwise (negative frequency) and vice versa. This mirror image is mathematically called "complex conjugate". Some spectrometers already perform this operation during acquisition. This is another reason (together with sequential acquisition) why spectra coming from different instruments require different processing.
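The mirror-image property is easy to verify on a synthetic signal. The Python sketch below uses a small, slow discrete FT written out in full; the sign of the exponent is an assumed convention (programs and spectrometers differ), and the 16-point signal is arbitrary.

```python
import cmath


def dft(x):
    """Plain discrete FT: X[k] = sum over n of x[n] * exp(-2*pi*i*k*n/N)."""
    n_pts = len(x)
    return [
        sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / n_pts) for n in range(n_pts))
        for k in range(n_pts)
    ]


def strongest_point(spectrum):
    """Index of the spectral point with the largest magnitude."""
    return max(range(len(spectrum)), key=lambda k: abs(spectrum[k]))


N = 16
CYCLES = 3   # the signal completes 3 counter-clockwise turns over the N points

fid = [cmath.exp(2j * cmath.pi * CYCLES * n / N) for n in range(N)]
conjugated = [z.conjugate() for z in fid]

original_position = strongest_point(dft(fid))          # point 3
mirrored_position = strongest_point(dft(conjugated))   # point N - 3 = 13

print(original_position, mirrored_position)
```

Point 13 of 16 is the same as point -3 once the periodicity of the transform is taken into account, so the peak has moved to the mirror position.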

The second property predicts that changing the sign of the even points of the FID is equivalent to swapping the left half of the spectrum with the right half. Because they are already swapped and need to be put back in the correct order, you understand this is a useful property. In the beginning of the SwaN-MR era this operation was simply called "swap". One day, when I was sillier than usual, I changed the name to "quadrature". A possible explanation is that spectroscopists usually say "I implement quadrature detection" instead of saying "I put the transmitter in the middle of the spectrum". Like conjugation, this operation may have already been performed by the spectrometer during acquisition; in this case don't do it a second time.

A third property says that the FT of a real function is symmetrical (apart from conjugation). This property is exploited by two techniques known as zero-filling and Hilbert transformation. The exploitation is so indirect and so difficult to explain that I'll skip the demonstration. Zero-filling means doubling the length of the FID by adding a series of zeroes to its tail. During FT, the information (signal) contained in the imaginary part will fill the empty space. As a final result, you increase the resolution of the spectrum. In fact, the spectral width is already defined by the sampling rate, so adding new points results in increasing their density and the description of details. The Hilbert transformation is the inverse trade: you first zero the imaginary part, then you reconstruct it. Programs present these two operations as different commands and books describe them with different formulae, so most people don't realize that they are two sides of the same coin. There may be cases in which you are forced to zero-fill anyway. It happens that computers prefer to apply the FT only to certain amounts of data, precisely to powers of 2. E.g. they like to transform a sequence of 1024 points, but never 1000. In this case 100% of computers will automatically zero-fill to 1024 points without asking your opinion. There is no point in being fussy here: I advise you to keep your computer happy.
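Both the "swap" and the forced zero-filling can be sketched in a few lines of Python. The discrete FT below uses an assumed sign convention and the test signal is arbitrary. Points are counted from 1 in the text, so the "even points" are those with an odd index here; negating the other set instead gives the same swap with an overall change of sign.

```python
import cmath


def dft(x):
    """Plain discrete FT: X[k] = sum over n of x[n] * exp(-2*pi*i*k*n/N)."""
    n_pts = len(x)
    return [
        sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / n_pts) for n in range(n_pts))
        for k in range(n_pts)
    ]


def zero_fill_to_power_of_two(data):
    """Append zeroes until the length is a power of 2 (1000 becomes 1024)."""
    size = 1
    while size < len(data):
        size *= 2
    return list(data) + [0j] * (size - len(data))


N = 8
# Arbitrary decaying signal, one turn over the N points.
fid = [(0.8 ** n) * cmath.exp(2j * cmath.pi * n / N) for n in range(N)]

# Change the sign of every second point (2nd, 4th, ... when counting from 1).
alternated = [z if n % 2 == 0 else -z for n, z in enumerate(fid)]

spectrum = dft(fid)
swapped_halves = spectrum[N // 2:] + spectrum[:N // 2]
from_alternated = dft(alternated)

worst = max(abs(a - b) for a, b in zip(from_alternated, swapped_halves))
print(f"largest difference between the two routes: {worst:.1e}")
print(len(zero_fill_to_power_of_two([0j] * 1000)))
```

The two routes agree to rounding error, so the sign alternation costs nothing compared with physically moving half of the points after the transform.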

A fourth property (actually a theorem) says that multiplication in time domain corresponds to convolution in frequency domain. Convolution is crossing two functions. The product equally resembles both parents. Spectroscopists, when they dislike their spectra, use convolutions like breeders cross their cattle. Convolution certainly takes less time than crossing two animals but is still a very long operation in a spectroscopist's perception of time. So it is always preferable to perform a "multiplication in time domain" or, to save three words, "weighting". When you weight you put into practice the Heisenberg uncertainty principle. The longer the apparent life of a signal in time domain, the more resolved it will appear in frequency domain. To reach this goal you multiply the FID by a function which rises in time. At a certain point the true signal has almost completely decayed and the FID only contains noise. When you arrive there it is better to use a function which decays in time in order to reduce the noise. It's the general sort of trade-off between sensitivity and resolution which makes all spectroscopies similar.
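The theorem can be checked numerically on a toy FID. The Python sketch below compares the quick route (weight, then FT) with the slow one (FT both functions, then convolve). The discrete FT convention is an assumption, and with this unscaled convention the circular convolution must be divided by the number of points; the signal and the decay rate are arbitrary.

```python
import cmath
import math


def dft(x):
    """Plain discrete FT: X[k] = sum over n of x[n] * exp(-2*pi*i*k*n/N)."""
    n_pts = len(x)
    return [
        sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / n_pts) for n in range(n_pts))
        for k in range(n_pts)
    ]


def circular_convolution(a, b):
    """Circular convolution of two sequences of equal length."""
    n_pts = len(a)
    return [
        sum(a[m] * b[(k - m) % n_pts] for m in range(n_pts)) for k in range(n_pts)
    ]


N = 16
fid = [cmath.exp(2j * cmath.pi * 3 * n / N) for n in range(N)]   # unweighted signal
weights = [math.exp(-0.3 * n) for n in range(N)]                 # decaying exponential

# Quick route: multiply in time domain, then transform.
quick = dft([x * w for x, w in zip(fid, weights)])
# Slow route: transform both, then convolve in frequency domain.
slow = [v / N for v in circular_convolution(dft(fid), dft(weights))]

worst = max(abs(a - b) for a, b in zip(quick, slow))
print(f"largest difference between the two routes: {worst:.1e}")
```

The quick route needs N multiplications, the slow one N squared, which is the practical reason for always weighting in time domain.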

A fifth property says that the total area of the spectrum is equal to the first point in the FID. This property affects both the phase and baseline characteristics of a spectrum. In fact it often happens that phase and baseline distortions are correlated. Now suppose that you have only one signal in your spectrum and that the corresponding magnetization was aligned along the y axis when the first point was sampled. The x (real) part is zero. According to the property, when you FT, half of the signal will be negative for the total area to be zero. Such a spectrum is called a "dispersion" spectrum. Normally an "absorption" spectrum is preferable, because the signal is all positive and narrower and the integral is proportional to the concentration of the chemical species. In the case described the absorption spectrum corresponds to the imaginary component. In real-life cases the absorption and dispersion spectra are partly in the real and partly in the imaginary component. A phase correction separates them and gives the desired absorption spectrum. At last you realize why the NMR signal is recorded in two channels simultaneously! Remember it! The other side of the coin is that the first point of the spectrum (the one at the transmitter frequency, as explained above) corresponds to the integral of the FID and, because the FID oscillates, to its average position. In theory the latter should be zero, so you can decide to raise or lower the FID in order for the average to be exactly zero. In this way you reduce the artifact that the transmitter leaves at the centre of the spectrum. iNMR, by default, does not apply this kind of "DC correction" (DC stands for direct current).
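Both sides of this property can be verified on a synthetic FID. In the Python sketch below the discrete FT convention is assumed; with this unscaled convention the "total area" is the sum of the spectral points divided by their number. The decaying signal is arbitrary.

```python
import cmath


def dft(x):
    """Plain discrete FT: X[k] = sum over n of x[n] * exp(-2*pi*i*k*n/N)."""
    n_pts = len(x)
    return [
        sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / n_pts) for n in range(n_pts))
        for k in range(n_pts)
    ]


N = 16


def decaying_signal(first_point):
    """Arbitrary decaying signal, 2 turns over N points, starting at first_point."""
    return [
        first_point * (0.8 ** n) * cmath.exp(2j * cmath.pi * 2 * n / N)
        for n in range(N)
    ]


along_x = decaying_signal(1 + 0j)   # magnetization on the x axis at the first sample
along_y = decaying_signal(0 + 1j)   # magnetization on the y axis at the first sample

area_x = sum(dft(along_x)) / N      # equals the first point of along_x
area_y = sum(dft(along_y)) / N      # equals the first point of along_y

first_spectrum_point = dft(along_x)[0]   # equals the sum of the whole FID

print(f"area, signal along x: {area_x.real:+.3f} (real) {area_x.imag:+.3f} (imaginary)")
print(f"area, signal along y: {area_y.real:+.3f} (real) {area_y.imag:+.3f} (imaginary)")
```

For the signal that starts along y the real part of the spectrum integrates to zero, as a dispersion shape must, while all the area is found in the imaginary part.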

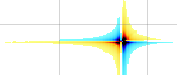

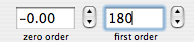

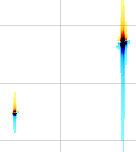

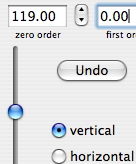

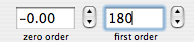

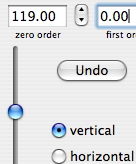

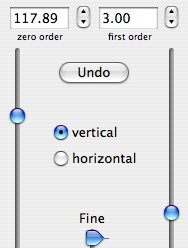

A sixth property says that a time shift corresponds to a linear phase distortion in frequency domain and vice versa. Because you can't begin acquisition during the exciting pulse (which represents time zero), you normally have to deal with this property. In fact you perform two kinds of correction: the zero-order one, described above, which affects the whole spectrum uniformly, and a first-order one, whose effect varies linearly with frequency. If in turn you want to shift all your frequencies by a non-integer number of points, the best way is to apply a first-order phase change to the FID.
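The link between a delay and a linear phase can be shown with a toy FID. This Python sketch assumes a particular discrete FT convention and, to keep the identity exact, uses a circular delay (the last point re-enters at the beginning), which is a simplification of what happens in a real acquisition.

```python
import cmath


def dft(x):
    """Plain discrete FT: X[k] = sum over n of x[n] * exp(-2*pi*i*k*n/N)."""
    n_pts = len(x)
    return [
        sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / n_pts) for n in range(n_pts))
        for k in range(n_pts)
    ]


N = 16
SHIFT = 1   # delay, in dwell times

fid = [(0.85 ** n) * cmath.exp(2j * cmath.pi * 3 * n / N) for n in range(N)]
delayed = fid[-SHIFT:] + fid[:-SHIFT]      # circular delay by SHIFT points

spectrum = dft(fid)
delayed_spectrum = dft(delayed)

# Prediction: same spectrum, with a phase that grows linearly with the point index.
predicted = [
    spectrum[k] * cmath.exp(-2j * cmath.pi * k * SHIFT / N) for k in range(N)
]

worst = max(abs(a - b) for a, b in zip(delayed_spectrum, predicted))
phase_step_deg = 360.0 * SHIFT / N

print(f"largest difference from the prediction: {worst:.1e}")
print(f"phase change between adjacent points: {phase_step_deg:.1f} degrees")
```

A delay of one dwell time therefore corresponds to a first-order phase of 360° across the whole spectral width, and the magnitude of each point is left unchanged.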

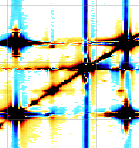

Finally the Fourier transformation shares the general properties of linear functions: it commutes with multiplication by a constant and with addition. Sometimes FT alone is not enough to separate signals. In these cases the acquisition scheme is complicated by the addition of delays and pulses. During a delay, for example, two signals starting with the same phase but rotating at different frequencies lose their phase equality. A pulse has no effect on a signal aligned along its axis but rotates a signal perpendicular (in phase quadrature) to it. So you should not be surprised that a suitable combination of pulses and delays can differentiate between signals. A signal is in effect a quantum transition between two energy levels A and B, caused by an RF pulse. A second pulse can move the transition to a third level C. It will be a new transition with a new frequency. This is just to show how many things can be done.

In the simplest experiment the FID is a simple function of time f(t). If we introduce a delay d at any point before the acquisition the FID becomes f(d, t). Now if we run the experiment many times, each time with a different value of d, d becomes a new time variable indeed. So the expression for the FID is now f(t1, t2). t2 comes later in the experimental scheme, so it corresponds to the "t" of the simple 1-pulse experiment. What do you have on the hard disk? A rectangular, ordered set of complex points called a matrix. The methods used to display it are usually borrowed from geography. If two dimensions are not enough for you, you can add a third or even a fourth one. The dimension with the highest index is said to be directly detected because switching on the detectors is the last stage in a pulse sequence.

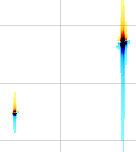

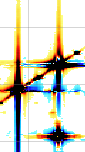

To go from FID(t1, t2) to Spectrum(f1, f2) you need to do everything twice. First weight, zero-fill, FT and phase correct along the direct dimension (t2 becomes f2), then weight, zero-fill, FT and phase correct along the indirect one (t1 becomes f1). Finally you can correct the baseline. The only freedom iNMR gives you outside this scheme is that you can also correct the phase along f2 after the correction along f1. This partial lack of freedom immensely simplifies your work.

Now there is good news and bad news. Bad news first. In 1D spectroscopy you appreciated the need to acquire the spectrum along two channels in quadrature. The books say it is required in order to put the transmitter in the middle of the spectrum. You know it is false. The true reason is that quadrature detection is needed to see the spectrum in absorption mode. The same holds for the f1 dimension. You need to duplicate everything again. Instead of using a hypercomplex point (which would need a 4D coordinate system to be conceptualised) it is customary to store points in their chronological order. You also have the real component of f1 stored in the odd rows of the matrix and the imaginary component in the even rows. The idea is that, after the spectrum is transformed and in absorption along f2, you can discard the imaginary part, because it only contains dispersion, and merge each odd row with the subsequent one. After the merging your points are complex again (and the number of rows is halved). The real part is the same as before, while what was the real part of the even rows is now the imaginary part. After the final FT the spectrum will be phaseable along f1 (but not along f2). In case you want to perform all phase corrections at the end, do not throw away the imaginary part: process it separately. When you need to correct the phase along f2, you swap the imaginary part along f1 with the imaginary part along f2. Now the good news: iNMR does all the book-keeping for you! You just have to specify if you want to proceed with the minimal amount of data or if you prefer keeping all of it.

The scheme outlined above is called Ruben-States. Another scheme exists, called TPPI. Everything said above still holds, plus TPPI requires a real FT, plus it already negates the even points (you have to remember not to do it again). Just because two is not the perfect number, someone added a third scheme called States-TPPI. Fortunately it is the simplest solution, in that it requires a plain FT (no "quadrature" like Ruben-States). The most ancient (and probably the most useful) 2D experiment, the COSY experiment, is not phaseable because it is even older than the oldest scheme. So you don't need to bother with all these things. Just switch to magnitude representation. To be honest, processing a COSY experiment is certainly easier than processing a 1D proton spectrum. There is also no need to correct the baseline and less sense in measuring integrals. Another widely used protocol is echo-antiecho. It's slightly more complicated and had the merit of allowing new phase-sensitive experiments, starting with HSQC (hetero-nuclear correlation detected through the hydrogen magnetization).
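The row-merging step of the Ruben-States scheme, as described above, can be written as a very small function. This is a Python sketch of the book-keeping only, not the iNMR implementation: the function name is hypothetical, and it assumes that the rows have already been transformed and phased along f2, so that their imaginary parts can be discarded.

```python
def merge_ruben_states(rows):
    """Merge each odd row (1st, 3rd, ...) with the row that follows it.

    rows: list of rows of complex points, already in absorption along f2.
    The real part of the odd row stays real; the real part of the even row
    becomes the imaginary part. The number of rows is halved.
    """
    if len(rows) % 2:
        raise ValueError("an even number of rows is required")
    merged = []
    for odd_row, even_row in zip(rows[0::2], rows[1::2]):
        merged.append([complex(a.real, b.real) for a, b in zip(odd_row, even_row)])
    return merged


# Tiny made-up matrix: the imaginary parts (all 9) are the dispersion to discard.
matrix = [
    [1 + 9j, 2 + 9j],
    [3 + 9j, 4 + 9j],
    [5 + 9j, 6 + 9j],
    [7 + 9j, 8 + 9j],
]

merged = merge_ruben_states(matrix)
for row in merged:
    print(row)
```

After the merge each column of the halved matrix is an ordinary complex FID along t1, ready for the second weighting and FT. Keeping the discarded imaginary parts in a second matrix, processed in the same way, is what allows a later phase correction along f2.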

If this long speech was too complicated for your tastes, it doesn't mean you cannot become an expert in NMR processing. Simply by playing with iNMR, by trial and error, observing the effect of each option and adding a small dose of common sense, you can still become a wizard.

Let's explain what this last term means. What we have been speaking of up to now is a not very meaningful function of time called the FID (free induction decay; induction is another way to refer to magnetization). It is not very meaningful because all the nuclei resonate at the same time. If Pavarotti and I were singing together, it would be easy for you to tell our individual contributions to the choir apart. But you are not trained to discriminate among a collection of atomic nuclei resonating together. The Fourier transformation (FT) is a mathematical tool that separates the contribution of each nucleus according to its resonance frequency. The FID is a function of time, while the transformed spectrum is a function of frequency. The FT requires a computer, and this is why you need a computer and a software application if you want to do some NMR. At first sight it may seem that the function of frequency should extend between -∞ and +∞. Actually the sampling theorem states that it only has to be calculated in the interval from 0 (included) to Ny (excluded), where Ny = Nyquist frequency = sampling rate = reciprocal of the dwell time.

Let's take a pause. What's the angle whose sine is 1? My pocket calculator says: 90°. I say: 90° ± n·360°. Who is right? Both! Coming back to our NMR experiment, suppose a signal is so fast that it rotates by exactly 360° during the dwell time. The two detectors will always see it in the same position, so they will believe it simply doesn't move (it has zero frequency). The same happens with four different signals which rotate by -350°, 10°, 370° and 730° during the dwell time: there is absolutely no way to tell which is which, unless you shorten the dwell time (a common experimental practice). A final case: you have two signals A and B, and A moves by 361° with respect to B in each sampling interval. You will get the impression that A is moving by only 1° each time. In conclusion, the maximum difference in frequency that can be detected is = number of cycles / time interval = 1 / dwell time = Nyquist, q.e.d. In NMR this quantity is called the spectral width. All the books report different expressions for the Nyquist frequency and the spectral width. One day we should open a discussion on the subject.
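The four indistinguishable signals can be checked with a few lines of Python. This is only a sketch: the function name sample_rotation and the choice of eight sampled points are mine, and each signal is idealized as a unit vector that never decays.

```python
import cmath
import math

def sample_rotation(degrees_per_dwell, n_points=8):
    """Sample a unit vector that rotates by a fixed angle every dwell time."""
    step = math.radians(degrees_per_dwell)
    return [cmath.exp(1j * step * n) for n in range(n_points)]

def indistinguishable(a, b, tolerance=1e-9):
    """True when two sampled signals agree point by point."""
    return all(abs(p - q) < tolerance for p, q in zip(a, b))

reference = sample_rotation(10)
for angle in (-350, 10, 370, 730):
    same = indistinguishable(sample_rotation(angle), reference)
    print(angle, "degrees per dwell time looks like 10 degrees:", same)
```

Any two rotations that differ by a whole number of turns per dwell time give the same samples; a rotation of, say, 20° does not.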

On a purely mathematical basis it doesn't matter how high the frequencies you have to record actually are. You can pretend the range starts at zero and extends just far enough to include the maximum signal separation. In practice detectors work in the low-frequency range. So you have technical limitations, and this is only the first one. The resonance frequencies today are approaching the GHz. The frame of reference also rotates at a similar frequency, so the apparent frequency is in the range of kHz. With this reduced frequency the dwell time needs to be on the order of milliseconds or less. The problem is that you need to filter out all other frequencies because they contain nasty noise (didn't I say it was a hi-fi matter?). So we need the rotating frame to move from the ideal world of theory and become a practical reality.

How is the rotating frame accomplished experimentally? A detector receives two signals, one coming from the sample under study and another which is a duplicate of the exciting frequency. The detector actually detects the difference between the two frequencies. To fully exploit the power of the pulse, the transmitter is put at the centre of the spectrum. Signals falling to the left of it appear as negative frequencies (well, here it is not important whether you use the delta or the tau scale and whether they are positive or negative; only the concept matters). We have said that the spectrum begins at zero. In fact, if you perform a plain FT, the whole left side appears shifted by the Nyquist frequency, that is, to the right of the right side!
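Here is a minimal sketch of that last statement. The slow dft function, the helper names and the sign convention of the exponent are my assumptions (programs differ on the sign); the signals are ideal, non-decaying tones that complete a whole number of cycles during the acquisition.

```python
import cmath
import math

def dft(points):
    """Plain (slow) discrete Fourier transform, no normalization."""
    n = len(points)
    return [sum(p * cmath.exp(-2j * math.pi * k * m / n) for m, p in enumerate(points))
            for k in range(n)]

def tone(cycles, n=16):
    """Signal completing `cycles` rotations over the n sampled points."""
    return [cmath.exp(2j * math.pi * cycles * m / n) for m in range(n)]

def peak_index(spectrum):
    """Position of the tallest point of the spectrum."""
    return max(range(len(spectrum)), key=lambda k: abs(spectrum[k]))

n = 16
positive = peak_index(dft(tone(+3, n)))  # found 3 points after zero
negative = peak_index(dft(tone(-3, n)))  # found 3 points before the end
print("frequency +3 appears at point", positive)
print("frequency -3 appears at point", negative, "=", n, "- 3")
```

With 16 points, the negative frequency lands at point 13, beyond all the positive ones, which is why the two halves have to be swapped back.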

The FT and its inverse show a number of interesting properties. The first one predicts that, if you complex-conjugate the FID, the transformed spectrum will be the mirror image of the original spectrum. In fact, if you invert the y component of a vector, you obtain its image across a mirror placed along the x axis. Anything rotating counter-clockwise (positive frequency) will appear to rotate clockwise (negative frequency) and vice versa. This mirror image is mathematically called the "complex conjugate". Some spectrometers already perform this operation when acquiring. This is another reason (together with sequential acquisition) why spectra coming from different instruments require different processing.
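The first property is easy to verify numerically. In this sketch the toy FID (two decaying signals), the slow dft function and its sign convention are all assumptions of mine, not what iNMR or any spectrometer does internally.

```python
import cmath
import math

def dft(points):
    """Plain (slow) discrete Fourier transform, no normalization."""
    n = len(points)
    return [sum(p * cmath.exp(-2j * math.pi * k * m / n) for m, p in enumerate(points))
            for k in range(n)]

def peak_index(spectrum):
    """Position of the tallest point of the spectrum."""
    return max(range(len(spectrum)), key=lambda k: abs(spectrum[k]))

n = 16
# Toy FID: two decaying signals at 2 and 5 cycles per acquisition.
fid = [math.exp(-m / 8) * (cmath.exp(2j * math.pi * 2 * m / n)
                           + 0.5 * cmath.exp(2j * math.pi * 5 * m / n))
       for m in range(n)]

spectrum = dft(fid)
mirrored = dft([p.conjugate() for p in fid])

# Point k of the new spectrum is the conjugate of point -k of the old one.
is_mirror = all(abs(mirrored[k] - spectrum[(-k) % n].conjugate()) < 1e-9
                for k in range(n))
print("conjugating the FID mirrors the spectrum:", is_mirror)
print("tallest peak moved from", peak_index(spectrum), "to", peak_index(mirrored))
```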

The second property predicts that changing the sign of the even points of the FID is equivalent to swapping the left half of the spectrum with the right half. Because the two halves are already swapped and need to be put back in the correct order, you understand that this is a useful property. In the beginning of the SwaN-MR era this operation was simply called "swap". One day, when I was sillier than usual, I changed the name to "quadrature". A possible explanation is that spectroscopists tend to say "I implement quadrature detection" instead of saying "I put the transmitter in the middle of the spectrum". Like conjugation, this operation may have already been performed by the spectrometer during acquisition; in this case don't do it a second time.

A third property says that the FT of a real function is symmetrical (apart from a complex conjugation). This property is exploited by two techniques known as zero-filling and Hilbert transformation. The exploitation is so indirect and so difficult to explain that I'll skip the demonstration. Zero-filling means doubling the length of the FID by adding a series of zeroes to its tail. During the FT, the information (signal) contained in the imaginary part will fill the empty space. As a final result, you increase the resolution of the spectrum. In fact, the spectral width is already defined by the sampling rate, so adding new points increases their density and the description of details. The Hilbert transformation is the reverse trade: you first zero the imaginary part, then you reconstruct it. Programs present these two operations as different commands and books describe them with different formulae, so most people don't realize that they are two sides of the same coin. There may be cases in which you are forced to zero-fill anyway. It happens that computers prefer to apply the FT only to certain amounts of data, precisely to powers of 2. E.g. they like to transform a sequence of 1024 points, but never 1000. In this case 100% of computers will automatically zero-fill to 1024 points without asking your opinion. There is no point in being fussy here: I advise you to keep your computer happy.
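Both the "swap" and the forced zero-filling can be sketched in Python. The names below (dft, zero_fill_to_power_of_two and the toy FID) are hypothetical and only serve the illustration; counting points from 1, the "even points" are those with an odd index in the code.

```python
import cmath
import math

def dft(points):
    """Plain (slow) discrete Fourier transform, no normalization."""
    n = len(points)
    return [sum(p * cmath.exp(-2j * math.pi * k * m / n) for m, p in enumerate(points))
            for k in range(n)]

def zero_fill_to_power_of_two(points):
    """Append zeroes until the length is a power of 2."""
    size = 1
    while size < len(points):
        size *= 2
    return list(points) + [0j] * (size - len(points))

n = 16
fid = [math.exp(-m / 8) * cmath.exp(2j * math.pi * 3 * m / n) for m in range(n)]
spectrum = dft(fid)

# Negate the 2nd, 4th, 6th... point of the FID, then transform.
alternated = [p if m % 2 == 0 else -p for m, p in enumerate(fid)]
swapped_by_sign = dft(alternated)

# Swap the two halves of the original spectrum by hand.
swapped_by_hand = spectrum[n // 2:] + spectrum[:n // 2]

same = all(abs(a - b) < 1e-9 for a, b in zip(swapped_by_sign, swapped_by_hand))
print("sign alternation swaps the two halves:", same)
print("1000 points are zero-filled to", len(zero_fill_to_power_of_two([0j] * 1000)))
```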

A fourth property (actually a theorem) says that multiplication in time domain corresponds to convolution in frequency domain. Convolution is crossing two functions. The product equally resembles both parents. Spectroscopists, when they dislike their spectra, use convolutions like breeders cross their cattle. Convolution certainly takes less time than crossing two animals but is still a very long operation in a spectroscopist's perception of time. So it is always preferable to perform a "multiplication in time domain" or, to save three words, "weighting". When you weight, you put the Heisenberg uncertainty principle into practice. The longer the apparent life of a signal in time domain, the more resolved it will appear in frequency domain. To reach this goal you multiply the FID by a function which rises in time. At a certain point the true signal has almost completely decayed and the FID only contains noise. When you arrive there it is better to use a function which decays in time in order to reduce the noise. It's the usual trade-off between sensitivity and resolution that all spectroscopies have in common.
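The theorem can be checked on a handful of points. This is a sketch under my own assumptions: a slow dft without normalization (hence the division by n below), a circular convolution, and a decaying exponential as the weighting function.

```python
import cmath
import math

def dft(points):
    """Plain (slow) discrete Fourier transform, no normalization."""
    n = len(points)
    return [sum(p * cmath.exp(-2j * math.pi * k * m / n) for m, p in enumerate(points))
            for k in range(n)]

def circular_convolution(a, b):
    """Cross two spectra point by point, wrapping around the edges."""
    n = len(a)
    return [sum(a[j] * b[(k - j) % n] for j in range(n)) for k in range(n)]

n = 8
fid = [math.exp(-m / 6) * cmath.exp(2j * math.pi * 2 * m / n) for m in range(n)]
weight = [math.exp(-m / 3) for m in range(n)]  # decays in time: less noise, broader lines

# The quick way: multiply in time domain, then transform.
quick = dft([f * w for f, w in zip(fid, weight)])

# The slow way: transform both, then convolve in frequency domain.
slow = [value / n for value in circular_convolution(dft(fid), dft(weight))]

same = all(abs(a - b) < 1e-9 for a, b in zip(quick, slow))
print("weighting equals convolution of the spectra:", same)
```

The slow way needs n multiplications for each of the n points, which is why nobody convolves when a simple weighting will do.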

A fifth property says that the total area of the spectrum is equal to the first point in the FID. This property affects both the phase and baseline characteristics of a spectrum. In fact it often happens that phase and baseline distortions are correlated. Now suppose that you have only one signal in your spectrum and that the corresponding magnetization was aligned along the y axis when the first point was sampled. The x (real) part is zero. According to the property, when you FT, half of the signal will be negative for the total area to be zero. Such a spectrum is called a "dispersion" spectrum. Normally an "absorption" spectrum is preferable, because the signal is all positive and narrower and the integral is proportional to the concentration of the chemical species. In the case described the absorption spectrum corresponds to the imaginary component. In real-life cases the absorption and dispersion spectra are partly in the real and partly in the imaginary component. A phase correction separates them and gives the desired absorption spectrum. At last you realize why the NMR signal is recorded in two channels simultaneously! Remember it!

The other side of the coin is that the first point of the spectrum (the one at the transmitter frequency, as explained above) corresponds to the integral of the FID and, because the FID oscillates, to its average position. In theory the latter should be zero, so you can decide to raise or lower the FID in order for the average to be exactly zero. In this way you reduce the artifact that the transmitter leaves at the centre of the spectrum. iNMR, by default, does not apply this kind of "DC correction". (DC stands for direct current.)
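Both sides of the coin can be verified on a toy FID. In this sketch the dft function carries no normalization, so the area has to be divided by the number of points; the constant offset added to the FID and the way the average is subtracted are my assumptions, not a description of what iNMR does.

```python
import cmath
import math

def dft(points):
    """Plain (slow) discrete Fourier transform, no normalization."""
    n = len(points)
    return [sum(p * cmath.exp(-2j * math.pi * k * m / n) for m, p in enumerate(points))
            for k in range(n)]

n = 16
offset = 0.2 + 0.1j  # artificial DC offset added to the toy FID
fid = [offset + math.exp(-m / 5) * cmath.exp(2j * math.pi * 3 * m / n)
       for m in range(n)]
spectrum = dft(fid)

# Total area of the spectrum (divided by n) equals the first point of the FID.
area = sum(spectrum) / n
print("area of the spectrum:", area, " first point of the FID:", fid[0])

# First point of the spectrum equals the integral (sum) of the FID.
print("first point of the spectrum:", spectrum[0], " sum of the FID:", sum(fid))

# DC correction: shift the FID so that its average is exactly zero.
average = sum(fid) / n
corrected = [p - average for p in fid]
print("first point after DC correction:", abs(dft(corrected)[0]))
```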

A sixth property says that a time shift corresponds to a linear phase distortion in frequency domain and vice versa. Because you can't begin acquisition during the exciting pulse (which represents time zero), you normally have to deal with this property. In fact you perform two kinds of correction: the zero-order one, described above, which affects the whole spectrum uniformly, and a first-order one, whose effect varies linearly with frequency. If in turn you want to shift all your frequencies by a non-integer number of points, the best way is to apply a first-order phase change to the FID.
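A numerical sketch of the sixth property follows. To make the identity exact I shift the toy FID circularly (the lost points reappear at the tail), which is an assumption of convenience: a real FID simply misses its first points. Function names and the sign convention of the dft are mine.

```python
import cmath
import math

def dft(points):
    """Plain (slow) discrete Fourier transform, no normalization."""
    n = len(points)
    return [sum(p * cmath.exp(-2j * math.pi * k * m / n) for m, p in enumerate(points))
            for k in range(n)]

n = 16
fid = [math.exp(-m / 6) * (cmath.exp(2j * math.pi * 2 * m / n)
                           + cmath.exp(2j * math.pi * 6 * m / n))
       for m in range(n)]
spectrum = dft(fid)

# Start "listening" 3 points late (circular shift of the FID).
shift = 3
shifted_spectrum = dft([fid[(m + shift) % n] for m in range(n)])

# Prediction: same spectrum, with a phase that grows linearly with the point index k.
predicted = [spectrum[k] * cmath.exp(2j * math.pi * k * shift / n) for k in range(n)]

same = all(abs(a - b) < 1e-9 for a, b in zip(shifted_spectrum, predicted))
print("a time shift is a first-order phase change:", same)
```

Undoing the linear phase in frequency domain is exactly what a first-order phase correction does.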

Finally the Fourier transformation shares the general properties of linear functions: it commutes with multiplication by a constant and with addition.

Sometimes FT alone is not enough to separate signals. In these cases the acquisition scheme is complicated by the addition of delays and pulses. During a delay, for example, two signals starting with the same phase but rotating at different frequencies lose their phase equality. A pulse has no effect on a signal aligned along its axis but rotates a signal perpendicular (in phase quadrature) to it. So you should not be surprised that a suitable combination of pulses and delays can differentiate between signals. A signal is in effect a quantum transition between two energy levels A and B, caused by an RF pulse. A second pulse can move the transition to a third level C. It will be a new transition with a new frequency. This is just to show how many things can be done.

In the simplest experiment the FID is a simple function of time f(t). If we introduce a delay d at any point before the acquisition, the FID becomes f(d,t). Now if we run the experiment many times, each time with a different value of d, d becomes a new time variable indeed. So the expression for the FID is now f(t1, t2). t2 comes later in the experimental scheme, so it corresponds to the "t" of the simple 1-pulse experiment. What do you have on the hard disk? A rectangular, ordered set of complex points called a matrix. The methods used to display it are usually borrowed from geography. If two dimensions are not enough for you, you can add a third or even a fourth one. The dimension with the highest index is said to be directly detected, because switching on the detectors is the last stage in a pulse sequence.

To go from FID(t1,t2) to Spectrum(f1, f2) you need to do everything twice. First weight, zero-fill, FT and phase correct along the direct dimension (t2, which becomes f2), then weight, zero-fill, FT and phase correct along the indirect one (t1, which becomes f1). Finally you can correct the baseline. The only freedom iNMR gives you outside this scheme is that you can also correct the phase along f2 after the correction along f1. This partial lack of freedom immensely simplifies your work.

Now there is good news and bad news. Bad news first. In 1-D spectroscopy you appreciated the need to acquire the spectrum along two channels in quadrature. The books say it is required in order to put the transmitter in the middle of the spectrum. You know it is false. The true reason is that quadrature detection is needed to see the spectrum in absorption mode. The same holds for the f1 dimension. You need to duplicate everything again. Instead of using a hypercomplex point (which would need a 4D coordinate system to be conceptualised) it is customary to store points in their chronological order. So you have the real component of f1 stored in the odd rows of the matrix and the imaginary component in the even rows. The idea is that, after the spectrum is transformed and in absorption along f2, you can discard the imaginary part, because it only contains dispersion, and merge each odd row with the following one. After the merging your points are complex again (and the number of rows is halved). The real part is the same as before, while what was the real part of the even rows is now the imaginary part. After the final FT the spectrum will be phaseable along f1 (but not along f2). In case you want to perform all phase corrections at the end, keep the imaginary part instead of throwing it away, and process it separately. When you need to correct the phase along f2, you swap the imaginary part along f1 with the imaginary part along f2. Now the good news: iNMR does all the book-keeping for you! You just have to specify if you want to proceed with the minimal amount of data or if you prefer keeping all of it.

The scheme outlined above is called Ruben-States. Another scheme exists, called TPPI. Everything said above still holds, plus TPPI requires a real FT, plus it already negates the even points (you have to remember not to do it again). Just because two is not the perfect number, someone added a third scheme called States-TPPI. Fortunately it is the simplest solution, in that it requires a plain FT (no "quadrature" like Ruben-States). The most ancient (and probably the most useful) 2D experiment, the COSY experiment, is not phaseable because it is even older than the oldest scheme. So you don't need to bother with all these things. Just switch to magnitude representation. To be honest, processing a COSY experiment is certainly easier than processing a 1D proton spectrum. There is also no need to correct the baseline and less sense in measuring integrals. Another widely used protocol is echo-antiecho. It's slightly more complicated and has the merit of allowing new phase-sensitive experiments, starting with HSQC (hetero-nuclear correlation detected through the hydrogen magnetization).
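The merging of rows described for the Ruben-States scheme, and the magnitude representation suggested for COSY, can be sketched as follows. This is not iNMR's code: the function names are hypothetical, the matrix is a plain list of rows of complex points, and the rows are assumed to be already transformed and phased along f2.

```python
def merge_row_pairs(rows):
    """Merge each odd row (1st, 3rd, ...) with the row that follows it.

    The imaginary parts (dispersion along f2) are discarded; the real part of
    the odd row stays real, the real part of the even row becomes imaginary.
    """
    if len(rows) % 2 != 0:
        raise ValueError("expected an even number of rows")
    merged = []
    for i in range(0, len(rows), 2):
        odd_row, even_row = rows[i], rows[i + 1]
        merged.append([complex(a.real, b.real) for a, b in zip(odd_row, even_row)])
    return merged

def magnitude(row):
    """Magnitude representation: the length of each complex point."""
    return [abs(point) for point in row]

# Toy matrix with 4 rows of 2 points; the imaginary parts are dummy values.
matrix = [
    [1 + 9j, 2 + 9j],
    [3 + 9j, 4 + 9j],
    [5 + 9j, 6 + 9j],
    [7 + 9j, 8 + 9j],
]
halved = merge_row_pairs(matrix)
print(halved)                      # 2 rows left, complex again
print(magnitude([3 + 4j, 0 + 1j]))
```

After this step each column of the halved matrix is ready for the final FT along the indirect dimension.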

If this long speech was too complicated for your taste, it doesn't mean you cannot become an expert in NMR processing. Simply by playing with iNMR, proceeding by trial and error, observing the effect of each option and adding a small dose of common sense, you can still become a wizard.

Saturday, 18 November 2006

Interface